Director’s Cut – Visual Attention Analysis in Cinematic VR Content

19th September 2018

On this page, we share the Director’s Cut dataset, which we hope will help in creating more immersive virtual reality experiences for 360° films. This page contains materials and related papers for the Director’s Cut research.

Abstract

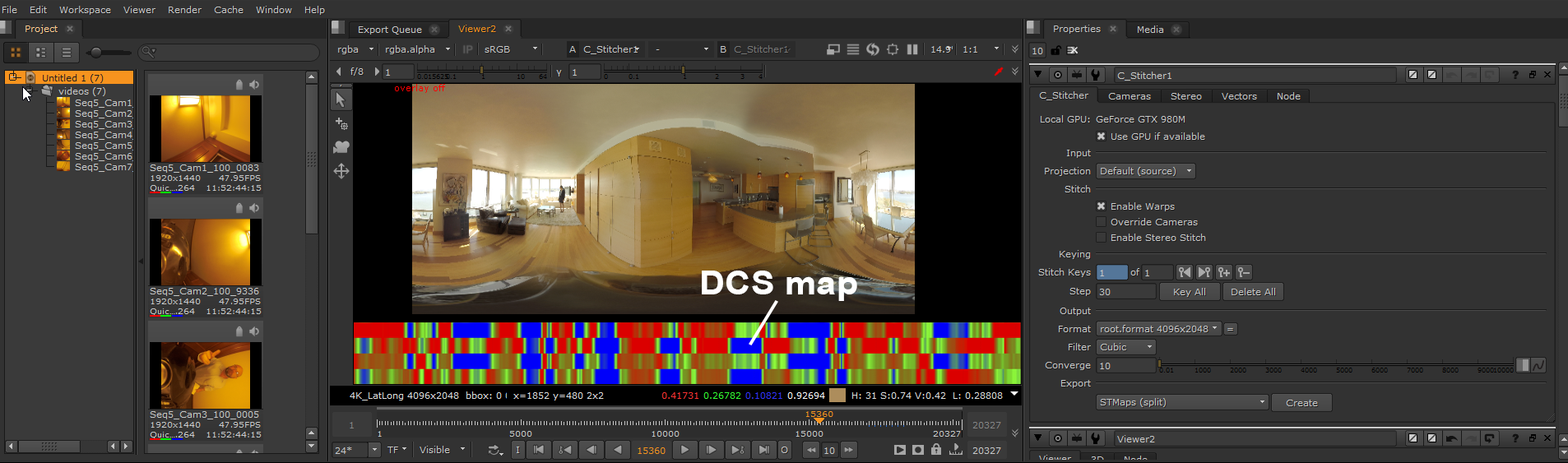

Methods of storytelling in cinema have well-established conventions that have been built over the course of its history and the development of the format. In 360° film, many of the techniques that form part of this cinematic language or visual narrative are difficult or impossible to apply because of the nature of the format, i.e., the image is not contained within the borders of a screen. In this paper, we analyze how end-users view 360° video in the presence of directional cues and evaluate whether they are able to follow the actual story of narrative 360° films. We first let filmmakers create an intended scan-path, the so-called director’s cut, by setting position markers in the equirectangular representation of the omnidirectional content for eight short 360° films. Alongside this, the filmmakers provided additional information regarding directional cues and plot points. Then, we performed a subjective test with 20 participants watching the films with a head-mounted display and recorded the center position of their viewports. The resulting scan-paths of the participants were then compared against the director’s cut using different scan-path similarity measures. In order to better visualize the similarity between the scan-paths, we introduce a new metric that measures and visualizes the viewport overlap between the participants’ scan-paths and the director’s cut. Finally, the entire dataset, i.e., the director’s cuts including the directional cues and plot points as well as the scan-paths of the test subjects, is made publicly available with this paper.
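As a rough illustration of the kind of comparison described above, the following Python sketch compares a participant's recorded viewport centres with a director's cut frame by frame. It is not the metric from the paper: the data layout (one equirectangular pixel position per sampled frame), the function names, and the circular viewport with a 45° half field of view are hypothetical assumptions made only for this example. Positions are mapped to longitude/latitude, and the angular distance between them is computed with the haversine formula.

```python
import math
from typing import List, Tuple

# Hypothetical data layout: one (x, y) pixel position per sampled frame
# in an equirectangular frame of size width x height.
Point = Tuple[float, float]


def equirect_to_lonlat(x: float, y: float, width: int, height: int) -> Tuple[float, float]:
    """Map an equirectangular pixel position to (longitude, latitude) in radians.

    Assumes x in [0, width) maps to longitude [-pi, pi) and y in [0, height)
    maps to latitude [pi/2, -pi/2] (the top row is the north pole).
    """
    lon = (x / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (y / height) * math.pi
    return lon, lat


def great_circle_deg(p: Tuple[float, float], q: Tuple[float, float]) -> float:
    """Angular distance in degrees between two (lon, lat) points (haversine formula).

    sin^2 is periodic, so wrap-around at the +/-180 degree seam is handled.
    """
    lon1, lat1 = p
    lon2, lat2 = q
    a = (math.sin((lat2 - lat1) / 2.0) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2.0) ** 2)
    # Clamp to guard against rounding pushing sqrt(a) slightly above 1.
    return math.degrees(2.0 * math.asin(min(1.0, math.sqrt(a))))


def overlap_ratio(user: List[Point], director: List[Point], width: int, height: int,
                  half_fov_deg: float = 45.0) -> float:
    """Fraction of frames in which the director's-cut marker lies inside the viewport.

    Simplifying assumption: the viewport is a circular cap of radius
    half_fov_deg around the recorded viewport centre (real HMD viewports
    are rectangular).
    """
    if not user or len(user) != len(director):
        raise ValueError("scan-paths must be non-empty and of equal length")
    hits = 0
    for u, d in zip(user, director):
        dist = great_circle_deg(equirect_to_lonlat(*u, width, height),
                                equirect_to_lonlat(*d, width, height))
        if dist <= half_fov_deg:
            hits += 1
    return hits / len(user)


if __name__ == "__main__":
    W, H = 3840, 1920
    director = [(1920, 960), (1920, 960), (2880, 960)]
    viewer = [(1920, 960), (2300, 960), (960, 960)]
    print(overlap_ratio(viewer, director, W, H))  # prints 0.6666666666666666
```

In the demo, the viewer matches the marker on the first frame, is about 36° away on the second (still inside the assumed 45° cap), and looks in the opposite direction on the third, giving an overlap of 2/3 under these assumptions. Measuring distance on the sphere rather than in pixels avoids the distortion of the equirectangular projection near the poles and at the left/right seam.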

Downloads

Please cite our papers in your publications if they help your research:

@inproceedings{Knorr2018,
title = {Director's Cut - A Combined Dataset for Visual Attention Analysis in Cinematic VR Content},
author = {Sebastian Knorr and Cagri Ozcinar and Colm O Fearghail and Aljosa Smolic},
year = {2018},
booktitle = {The 15th ACM SIGGRAPH European Conference on Visual Media Production}
}

@inproceedings{Fearghail2018,
title = {Director's Cut - Analysis of Aspects of Interactive Storytelling for VR Films},
author = {Colm O Fearghail and Cagri Ozcinar and Sebastian Knorr and Aljosa Smolic},
year = {2018},
booktitle = {International Conference on Interactive Digital Storytelling (ICIDS) 2018}
}

@inproceedings{Fearghail2018v2,
title = {Director's Cut - Analysis of VR Film Cuts for Interactive Storytelling},
author = {Colm O Fearghail and Cagri Ozcinar and Sebastian Knorr and Aljosa Smolic},
year = {2018},
booktitle = {International Conference on 3D Immersion (IC3D) 2018}
}

Acknowledgement

We would like to thank the VR filmmakers Angus Cameron, Soenke Kirchhof, Josef Kluger, Declan Dowling and Jack Morrow for fruitful discussions, for their great support in providing the Director’s Cuts for their VR films, and for their feedback on developing the DCS maps.

This publication has emanated from research conducted with the financial support of Science Foundation Ireland (SFI) under the Grant Number 15/RP/2776.

Contact

If you have any questions, please send an e-mail to ofearghc@scss.tcd.ie, ozcinarc@scss.tcd.ie or sebastian.knorr@gmx.de.