Sharpness Mismatch Detection in Stereoscopic Content with 360-Degree Capability

31st October 2018

This page is dedicated to the paper on sharpness mismatch detection for stereoscopic omnidirectional images, published at ICIP 2018. Here you can find the dataset described in the paper, comprising 96 stereoscopic omnidirectional images together with their visual attention maps.

Abstract

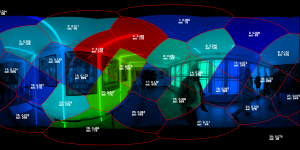

This paper presents a novel sharpness mismatch detection method for stereoscopic images based on the comparison of edge width histograms of the left and right view. The new method is evaluated on the LIVE 3D Phase II and Ningbo 3D Phase I datasets and compared with two state-of-the-art methods. Experimental results show that the new method correlates highly with user scores of subjective tests and that it outperforms the current state-of-the-art. We then extend the method to stereoscopic omnidirectional images by partitioning the images into patches using a spherical Voronoi diagram. Furthermore, we integrate visual attention data into the detection process in order to weight sharpness mismatch according to the likelihood of its appearance in the viewport of the end-user’s virtual reality device. For obtaining visual attention data, we performed a subjective experiment with 17 test subjects and 96 stereoscopic omnidirectional images. The entire dataset, including the viewport trajectory data and resulting visual attention maps, is publicly available with this paper.
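To make the three ideas in the abstract concrete (histogram comparison, spherical Voronoi patches, attention weighting), here is a minimal Python sketch. It is not the implementation from the paper: the edge width extraction is omitted, and the distance measure (total variation), the seed placement (a Fibonacci lattice), the form of the weighted average, and all function names are assumptions chosen for illustration only.

```python
import math
from collections import Counter


def histogram_mismatch(left_widths, right_widths):
    """Total-variation distance between two edge width histograms.

    Inputs are lists of integer edge widths (in pixels) measured in the
    left and right view. The distance measure is an assumption; the
    abstract does not say how the histograms are compared.
    """
    if not left_widths or not right_widths:
        return 0.0  # assumption: no edges in a view means nothing to compare
    hl, hr = Counter(left_widths), Counter(right_widths)
    nl, nr = len(left_widths), len(right_widths)
    widths = set(hl) | set(hr)
    return 0.5 * sum(abs(hl[w] / nl - hr[w] / nr) for w in widths)


def fibonacci_sphere(n):
    """Return n roughly evenly spread unit vectors (hypothetical Voronoi seeds)."""
    turn = math.pi * (1.0 + math.sqrt(5.0))
    seeds = []
    for i in range(n):
        z = 1.0 - 2.0 * (i + 0.5) / n
        r = math.sqrt(1.0 - z * z)
        seeds.append((r * math.cos(turn * i), r * math.sin(turn * i), z))
    return seeds


def voronoi_cell(direction, seeds):
    """Index of the spherical Voronoi cell containing a unit direction.

    On the unit sphere the nearest seed is the one with the largest
    dot product, so no explicit cell geometry is needed.
    """
    dots = [sum(d * s for d, s in zip(direction, seed)) for seed in seeds]
    return max(range(len(seeds)), key=dots.__getitem__)


def weighted_mismatch(patch_scores, patch_attention):
    """Attention-weighted mean of per-patch mismatch scores."""
    total = sum(patch_attention)
    if total == 0:
        return sum(patch_scores) / len(patch_scores)  # fall back to plain mean
    return sum(w * s for w, s in zip(patch_attention, patch_scores)) / total
```

In such a pipeline, each pixel direction would be assigned to a patch with `voronoi_cell`, `histogram_mismatch` would be evaluated per patch, and `weighted_mismatch` would combine the patch scores using the mean visual attention of each patch as its weight.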

Downloads

Please cite our paper in your publications if it helps your research:

@inproceedings{Croci2018b,
  title = {Sharpness Mismatch Detection in Stereoscopic Content with 360-Degree Capability},
  author = {Simone Croci and Sebastian Knorr and Aljosa Smolic},
  year = {2018},
  booktitle = {IEEE International Conference on Image Processing}
}

Acknowledgement

This publication has emanated from research conducted with the financial support of Science Foundation Ireland (SFI) under the Grant Number 15/RP/2776.

Contact

If you have any questions, please send an e-mail to crocis@scss.tcd.ie or sebastian.knorr@gmx.de.