Autonomous Tracking For Volumetric Video Sequences

3rd December 2020

Abstract

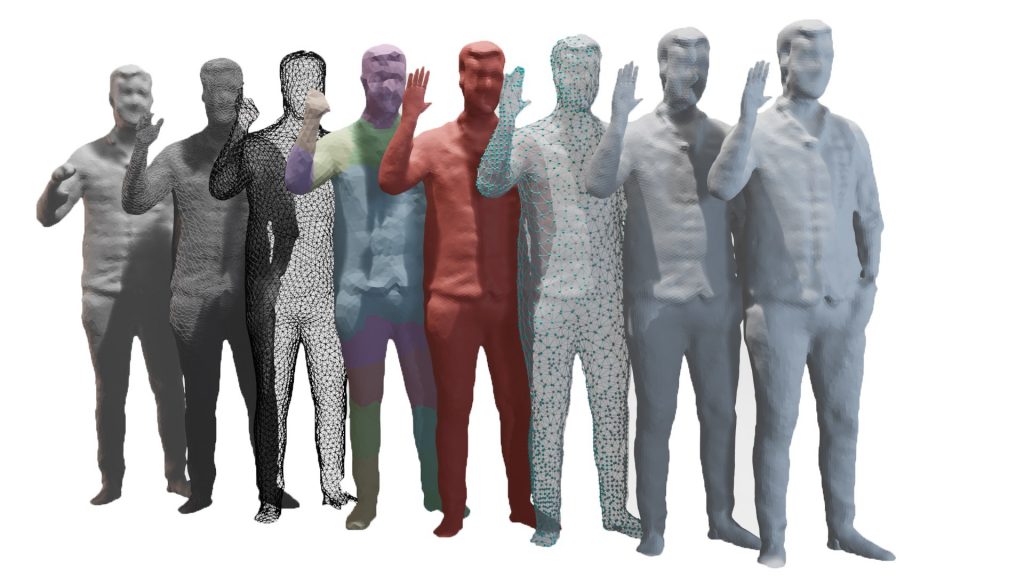

As a rapidly growing medium, volumetric video is gaining attention beyond academia, reaching industry and creative communities alike. This growth brings new challenges in lowering the technical and economic barriers to entry. We present a system that robustly and autonomously performs temporally coherent tracking of volumetric sequences, specifically targeting those captured with sparse setups or containing noisy output. Our system detects and recovers missing pertinent geometry across highly incoherent sequences and gives users the option of propagating drastic topology edits. In this way, affordable multi-view setups can leverage temporal consistency to reduce processing and compression overheads while also generating more aesthetically pleasing volumetric sequences.

Publication

Autonomous Tracking For Volumetric Video Sequences, Matthew Moynihan, Susana Ruano, Rafael Pagés and Aljosa Smolic, WACV 2021