Depth Map Estimation from Light Field Images

7th July 2017

Abstract

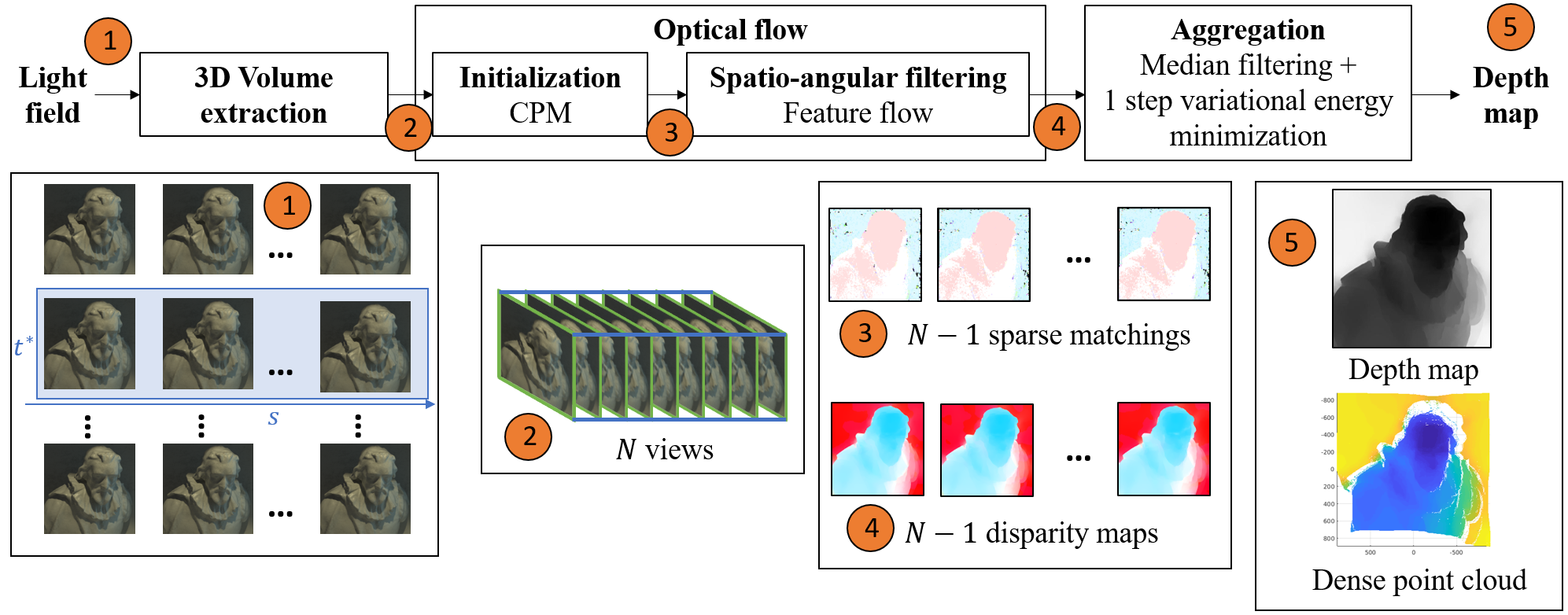

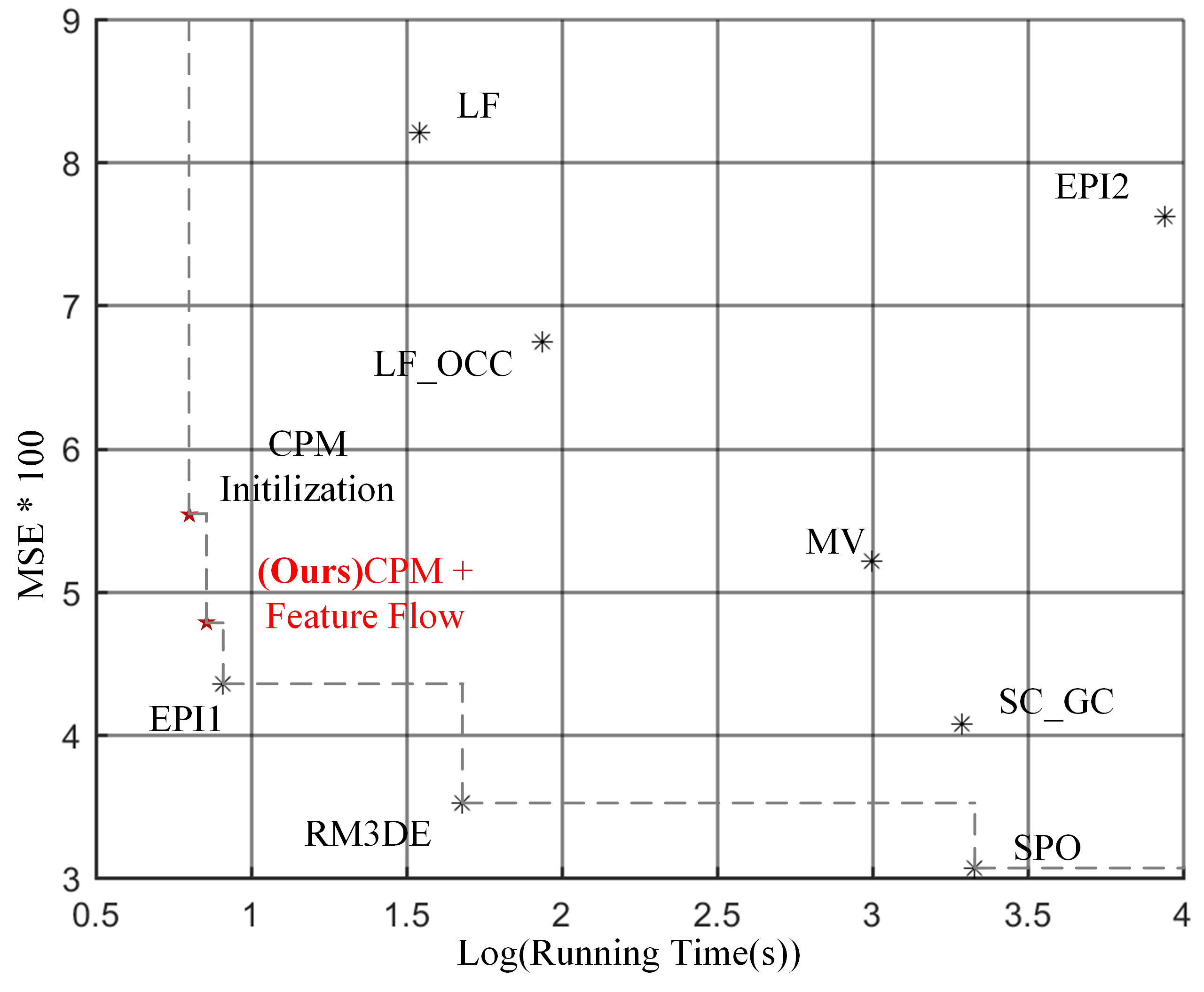

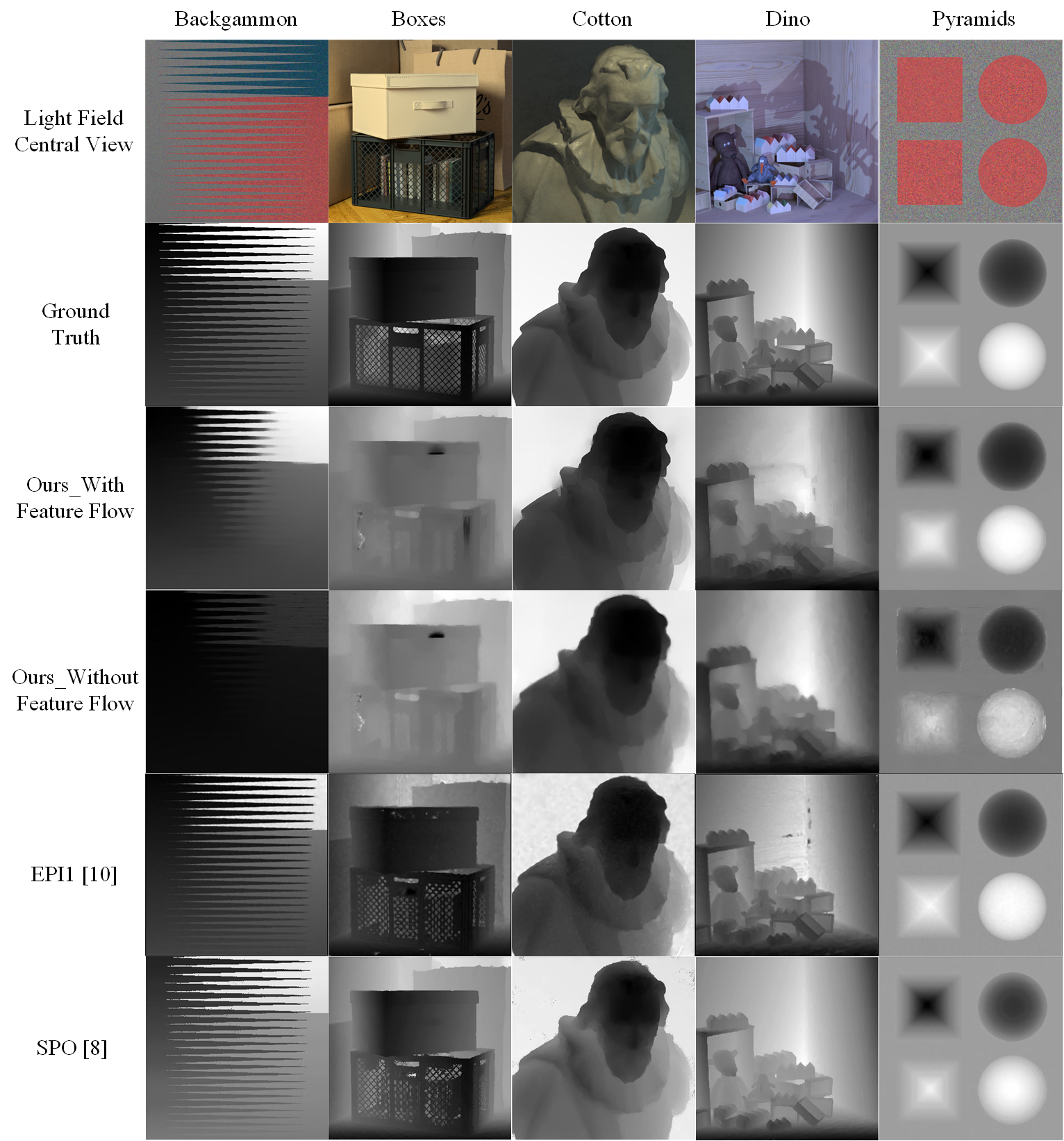

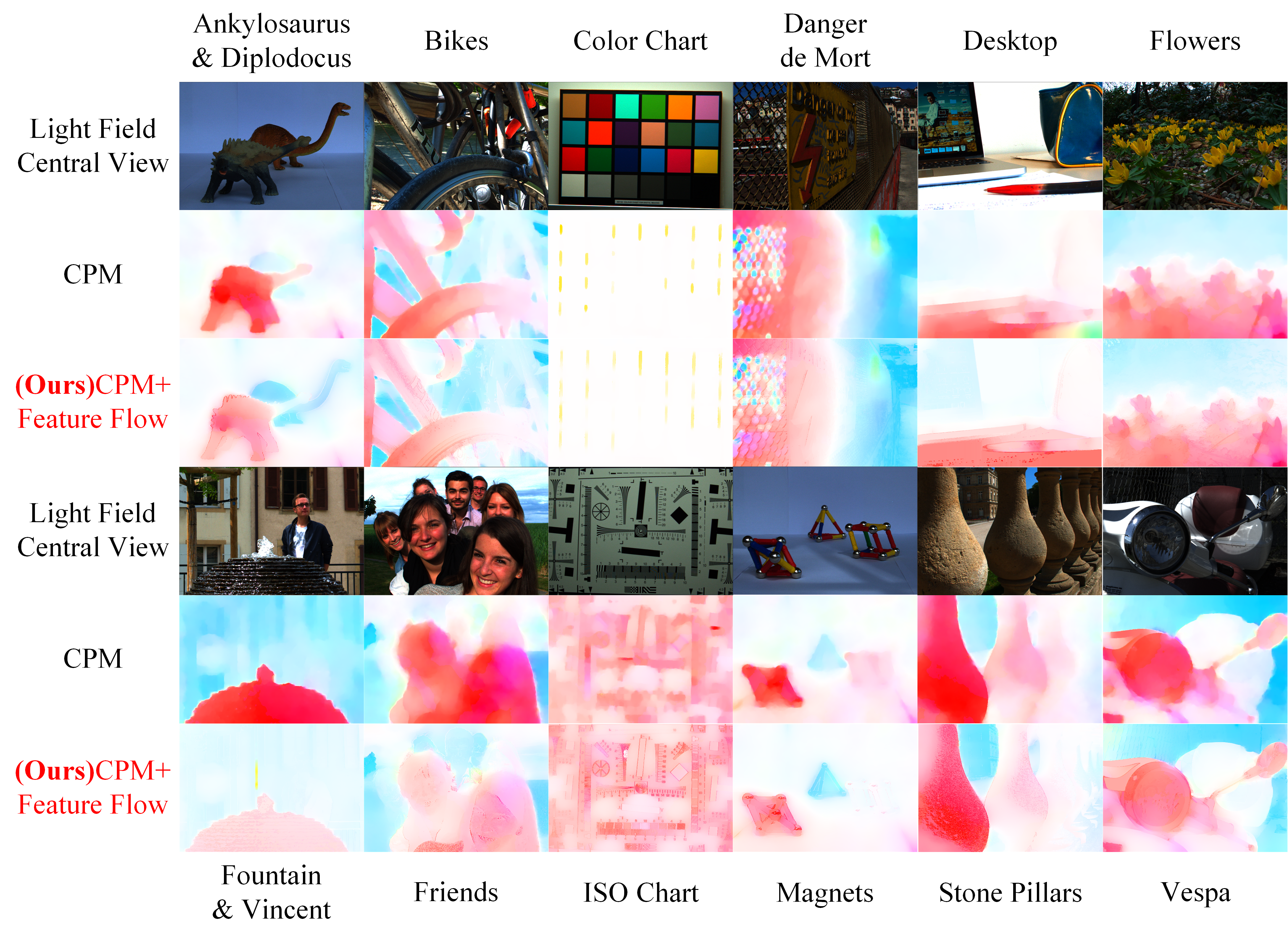

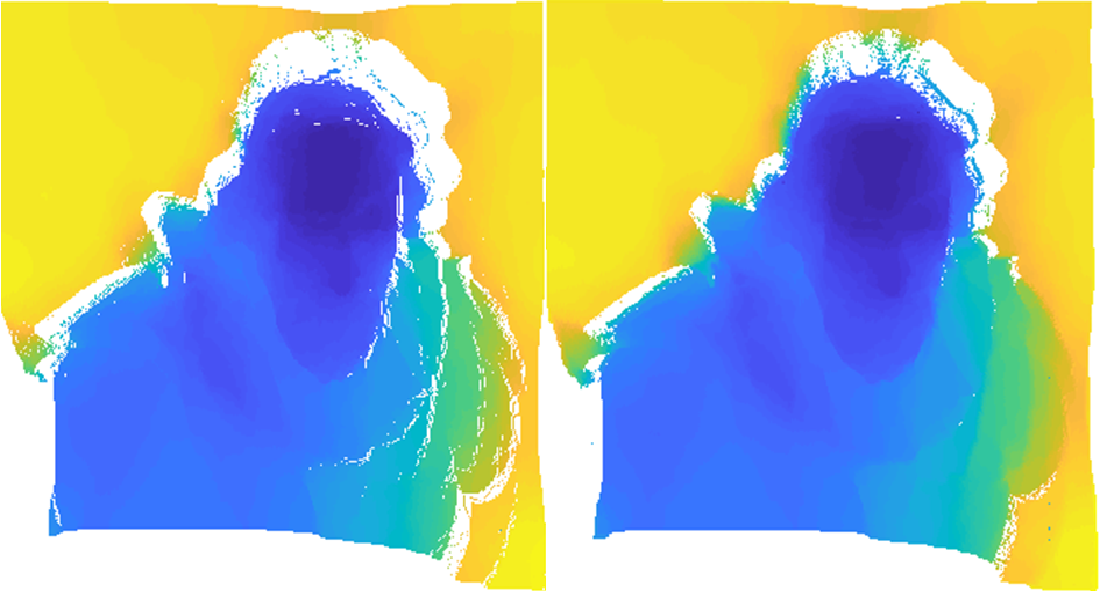

Depth map estimation is a crucial task in computer vision. New approaches have recently emerged that take advantage of light fields, an imaging modality that captures much more information about the angular direction of light rays than conventional approaches based on stereoscopic or multi-view images. We propose a novel method for depth estimation from light fields that builds on existing optical flow estimation methods. The optical flow estimator is applied to a sequence of images taken along an angular dimension of the light field, which produces several disparity map estimates. Considering both accuracy and efficiency, we choose the feature flow method as our optical flow estimator. Thanks to its spatio-temporal edge-aware filtering properties, the disparity map estimates we obtain are very consistent. This consistency allows a fast and simple aggregation step that produces a single disparity map, which can then be converted into a depth map. Alternatively, because the estimates are consistent, we can create a depth map from each disparity estimate and then aggregate the different depth maps in 3D space to create a single dense depth map.
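As a rough illustration of the aggregation and conversion steps described in the abstract, the sketch below merges several disparity estimates and converts the result to depth. It is not the paper's implementation: the per-pixel median, the pinhole relation Z = f * B / d for parallel views, and the names `aggregate_disparities`, `disparity_to_depth`, `focal_px`, and `baseline_m` are all assumptions chosen for the example, and the toy disparity values are made up.

```python
from statistics import median


def aggregate_disparities(estimates):
    """Merge equally sized 2D disparity maps with a per-pixel median.

    The median is an assumed aggregation rule, not the one from the paper.
    """
    rows, cols = len(estimates[0]), len(estimates[0][0])
    return [
        [median(est[r][c] for est in estimates) for c in range(cols)]
        for r in range(rows)
    ]


def disparity_to_depth(disparity, focal_px, baseline_m, eps=1e-6):
    """Convert disparity (pixels) to depth (metres) with Z = f * B / d.

    Assumes a pinhole model with parallel views; near-zero disparities
    are mapped to infinity instead of dividing by zero.
    """
    return [
        [focal_px * baseline_m / d if abs(d) > eps else float("inf") for d in row]
        for row in disparity
    ]


# Three toy 2x2 disparity estimates, standing in for optical flow outputs.
estimates = [
    [[2.0, 4.0], [1.0, 0.0]],
    [[2.2, 4.0], [1.0, 0.0]],
    [[1.8, 3.0], [1.0, 0.0]],
]

disparity = aggregate_disparities(estimates)
depth = disparity_to_depth(disparity, focal_px=100.0, baseline_m=0.01)
```

A median is used here only because it tolerates an occasional outlier estimate; when the estimates are as consistent as the abstract reports, a plain mean would likely behave similarly.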

This paper received the Jonathan Campbell Best Paper Award at The Irish Machine Vision and Image Processing Conference, 2017.