Super-resolution of Omnidirectional Images Using Adversarial Learning

12th August 2019

Abstract

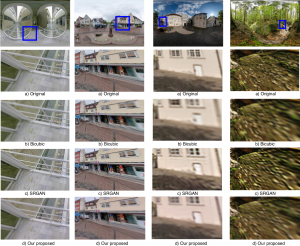

An omnidirectional image (ODI) enables viewers to look in every direction from a fixed point through a head-mounted display, providing a more immersive experience than a standard image. Designing immersive virtual reality systems with ODIs is challenging because they require high-resolution content. In this paper, we study super-resolution for ODIs and propose an improved generative adversarial network (GAN)-based model that is optimized to handle the artifacts arising in the spherical observation space. Specifically, we propose to use a fast PatchGAN discriminator, as it needs fewer parameters and improves super-resolution at a fine scale. We also explore generative models with adversarial learning by introducing a spherical-content-specific loss function, called 360-SS. To train and test the performance of our proposed model, we prepared a dataset of 4500 ODIs. Our results demonstrate the efficacy of the proposed method and identify new challenges in ODI super-resolution for future investigation.
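The abstract relies on two ideas that a small calculation makes concrete: a PatchGAN discriminator scores many local patches instead of the whole image, and equirectangular ODIs oversample the poles, so a spherically aware loss should down-weight polar rows. The sketch below is a hedged, stdlib-only illustration under stated assumptions, not the authors' code. The layer stack is the 70×70 PatchGAN from pix2pix, which may differ from the discriminator used in this paper. The cos(latitude) row weighting is the WS-PSNR weighting, swapped in purely for illustration; it is not the 360-SS loss, whose definition is given in the paper. All names (`PIX2PIX_70_LAYERS`, `receptive_field`, `output_size`, `erp_row_weights`, `weighted_mse`) are hypothetical.

```python
import math

# Hypothetical illustration only; not the authors' implementation.

# (1) How large a patch one PatchGAN output unit "sees".
# Assumption: the 70x70 PatchGAN of pix2pix, i.e. three 4x4 stride-2
# convolutions followed by two 4x4 stride-1 convolutions, padding 1.
PIX2PIX_70_LAYERS = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]  # (kernel, stride)


def receptive_field(layers):
    """Receptive field, in input pixels, of one output unit of a conv stack."""
    rf = 1
    # Walk backwards from the output: each layer widens the field.
    for kernel, stride in reversed(layers):
        rf = (rf - 1) * stride + kernel
    return rf


def output_size(size, layers, padding=1):
    """Side length of the output map, i.e. how many patches per side are scored."""
    for kernel, stride in layers:
        size = (size + 2 * padding - kernel) // stride + 1
    return size


# (2) Latitude-weighted MSE for equirectangular (ERP) images.
# Assumption: each row is weighted by cos(latitude), as in WS-PSNR,
# so oversampled polar rows contribute less. This is NOT the 360-SS loss.
def erp_row_weights(height):
    """cos(latitude) weight for each of the `height` ERP rows."""
    return [math.cos((j + 0.5 - height / 2) * math.pi / height) for j in range(height)]


def weighted_mse(pred, target):
    """pred/target: equal-sized 2-D lists (rows x columns) of pixel values."""
    weights = erp_row_weights(len(pred))
    num = den = 0.0
    for w, row_p, row_t in zip(weights, pred, target):
        for p, t in zip(row_p, row_t):
            num += w * (p - t) ** 2
            den += w
    return num / den


if __name__ == "__main__":
    print(receptive_field(PIX2PIX_70_LAYERS))   # 70
    print(output_size(256, PIX2PIX_70_LAYERS))  # 30
```

Under these assumptions, a 256×256 input yields a 30×30 grid of real/fake decisions, each covering a 70×70 region, which is why a patch-based discriminator concentrates on fine-scale texture. With the row weighting, an error confined to a polar row costs less than the same error on an equatorial row.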

Citation

Paper accepted at the IEEE 21st International Workshop on Multimedia Signal Processing (MMSP 2019).

Please cite our paper in your publications if it helps your research:

@inproceedings{mmspsr2019,
  title = {Super-resolution of Omnidirectional Images Using Adversarial Learning},
  author = {Cagri Ozcinar and Aakanksha Rana and Aljosa Smolic},
  year = {2019},
  booktitle = {IEEE 21st International Workshop on Multimedia Signal Processing (MMSP 2019)}
}

Acknowledgment

This publication has emanated from research conducted with the financial support of Science Foundation Ireland (SFI) under Grant Number 15/RP/2776.

Contact

If you have any questions, please send an e-mail to c.ozcinar@tcd.ie or ranaa@scss.tcd.ie.

Publication

Super-resolution of Omnidirectional Images Using Adversarial Learning, Cagri Ozcinar*, Aakanksha Rana*, and Aljosa Smolic. In IEEE MMSP 2019.