Generating Ambisonics Using Audio-Visual Cue for Virtual Reality

2nd October 2019

Proposed by Aakanksha Rana and Cagri Ozcinar. Email: ranaa at scss.tcd.ie or ozcinarc at scss.tcd.ie

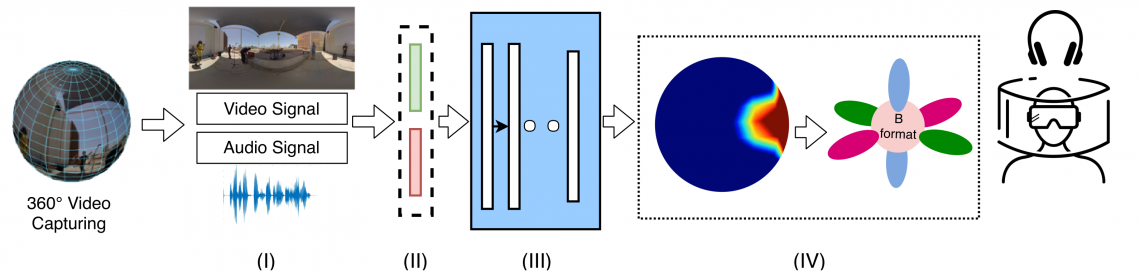

Multimodal representations for Augmented/Virtual Reality (AR/VR) are the next step in multimedia technology, providing a more immersive experience than ever before (see, e.g., Facebook’s purchase of the Kickstarter-funded company Oculus). Creating realistic VR experiences requires 360 video to be captured together with spatial audio. The spatial aspect of sound plays a significant role in telling viewers where objects are located in the 360 environment, and is therefore essential to an immersive multimedia experience. In practice, however, existing affordable 360 cameras capture the visual scene with only mono or stereo audio. As a consequence, such multimodal content cannot create a fully immersive sense of “being there” in the VR environment. Recent user studies have underlined the need for spatial audio to achieve presence in a VR setting.

In this context, this project will propose an end-to-end solution, starting from multimodal content representation, and develop a platform for mono-to-ambisonic conversion using audio-visual cues. The proposed solution aims to understand, learn and predict sound source locations from the visual and audio signals, and to leverage this knowledge to redesign a real-time VR pipeline for an immersive VR experience.
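To make the target of mono-to-ambisonic conversion concrete, the sketch below encodes a mono signal into first-order ambisonics for a single, already known source direction. It is only an illustration, not the method proposed in this project: the function name `encode_foa`, the test tone, and the choice of the AmbiX convention (ACN channel order W, Y, Z, X with SN3D normalisation) are assumptions made here. In the project itself, the direction would have to be predicted from the audio-visual cues rather than supplied by hand.

```python
import math


def encode_foa(mono, azimuth_deg, elevation_deg):
    """Encode mono samples into first-order ambisonics.

    Assumed convention (not specified in the proposal): AmbiX, i.e.
    ACN channel order (W, Y, Z, X) with SN3D normalisation.
    Azimuth is measured counter-clockwise from the front, elevation upwards.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    gains = (
        1.0,                          # W: omnidirectional component
        math.sin(az) * math.cos(el),  # Y: left-right axis
        math.sin(el),                 # Z: up-down axis
        math.cos(az) * math.cos(el),  # X: front-back axis
    )
    # One output channel per gain, each a scaled copy of the mono signal.
    return [[g * s for s in mono] for g in gains]


# Hypothetical input: 10 ms of a 440 Hz tone sampled at 48 kHz.
sample_rate = 48000
tone = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(480)]

# Place the source directly to the listener's left, at ear height.
w, y, z, x = encode_foa(tone, azimuth_deg=90.0, elevation_deg=0.0)
```

With the source at 90 degrees azimuth and zero elevation, all of the directional energy ends up in the Y channel, while X and Z stay at (numerically) zero. A learned system would effectively estimate such direction-dependent gains over time and frequency instead of using one fixed direction.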

References:

https://v-sense.scss.tcd.ie:443/wp-content/uploads/2019/02/ICASSP2019_multimodal.pdf

https://papers.nips.cc/paper/7319-self-supervised-generation-of-spatial-audio-for-360-video.pdf

Requirements:

Basic understanding of deep learning

Strong Python programming skills, with knowledge of PyTorch/TensorFlow tools

The ideal candidate should have an interest in VR and must be able to learn new tools and concepts.