Deep Learning-Based Generative Inpainting for VR

18th October 2018

Proposed by Aakanksha Rana and Cagri Ozcinar

Email: ranaa at scss.tcd.ie or ozcinarc at scss.tcd.ie

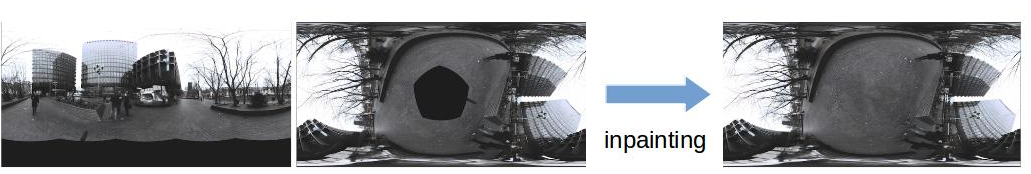

Because they can be explored interactively by looking around, omnidirectional images (ODIs), also called 360-degree images, are an increasingly popular image representation. While ODI capture and compression are well understood, editing ODIs poses many challenges. In this project, an automatic inpainting approach based on a deep network architecture will be developed to fill missing regions (holes) and to remove unwanted objects (e.g., stitching errors, the cameraman, tripods) in ODI content. The underlying idea is to design an adversarial learning framework that learns a structured loss in which each output (predicted) pixel is conditionally dependent on one or more neighboring pixels in the input image.
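As a rough illustration of the setup described above, the following minimal sketch builds a hole mask over the nadir region of an equirectangular ODI (where a tripod typically appears) and combines a masked reconstruction term with an adversarial term. Everything here is an assumption made for illustration, not the project's design: the function names `nadir_mask` and `generator_loss`, the equirectangular layout, the 150-degree threshold, the L1 reconstruction term, the non-saturating adversarial term, and the weight `adv_weight` are all hypothetical. The list `disc_scores` stands in for the per-patch outputs of a discriminator network, which is not implemented here.

```python
import math


def nadir_mask(height, width, min_polar_deg=150.0):
    """Return a height x width mask (1 = hole) around the nadir.

    Assumes an equirectangular image whose rows span polar angles
    from 0 degrees (zenith, top row) to 180 degrees (nadir, bottom row).
    """
    mask = []
    for row in range(height):
        # Polar angle at the centre of this row.
        polar = (row + 0.5) / height * 180.0
        value = 1 if polar >= min_polar_deg else 0
        mask.append([value] * width)
    return mask


def generator_loss(target, predicted, mask, disc_scores, adv_weight=0.01):
    """Masked L1 reconstruction plus a weighted adversarial term.

    target, predicted: 2D lists of pixel intensities.
    mask: 2D list, 1 where the pixel was missing and had to be inpainted.
    disc_scores: hypothetical per-patch discriminator probabilities in
        (0, 1] that the inpainted image is real.
    """
    hole_pixels = sum(sum(row) for row in mask)
    abs_error = sum(
        abs(t - p) * m
        for t_row, p_row, m_row in zip(target, predicted, mask)
        for t, p, m in zip(t_row, p_row, m_row)
    )
    # Average the error over the hole only; guard against an empty mask.
    reconstruction = abs_error / max(hole_pixels, 1)

    # Non-saturating generator term: small when patches look real.
    eps = 1e-12
    adversarial = -sum(math.log(s + eps) for s in disc_scores) / len(disc_scores)

    return reconstruction + adv_weight * adversarial


if __name__ == "__main__":
    mask = nadir_mask(6, 4)
    target = [[1.0] * 4 for _ in range(6)]
    predicted = [[0.5] * 4 for _ in range(6)]
    print(generator_loss(target, predicted, mask, disc_scores=[0.9, 0.8]))
```

Scoring patches rather than the whole image is one way to make each predicted pixel depend on its neighborhood; a real system would replace the hand-supplied `disc_scores` with a trained discriminator and would likely account for the distortion that the equirectangular projection introduces near the poles.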

Keywords: ODI, Inpainting, GANs, Deep Learning.

References

MacQuarrie, A. and Steed, A. Object Removal in Panoramic Media, ACM SIGGRAPH European Conference on Visual Media Production (CVMP), 2017. http://discovery.ucl.ac.uk/1471316/1/submission_no_cr.pdf

Manual editing tools: http://ashblagdon.com/affinity-photo-inpainting-tutorial/

Iizuka, S., Simo-Serra, E. and Ishikawa, H. Globally and Locally Consistent Image Completion, SIGGRAPH 2017. http://hi.cs.waseda.ac.jp/~iizuka/projects/completion/data/completion_sig2017.pdf

Yeh, R. A., Chen, C., Lim, T. Y. et al. Semantic Image Inpainting with Deep Generative Models, CVPR 2017. http://openaccess.thecvf.com/content_cvpr_2017/papers/Yeh_Semantic_Image_Inpainting_CVPR_2017_paper.pdf