Audio-visual attention modeling using deep learning for VR

4th June 2019

Proposed by Aakanksha Rana and Cagri Ozcinar

Email: ranaa at scss.tcd.ie or ozcinarc at scss.tcd.ie

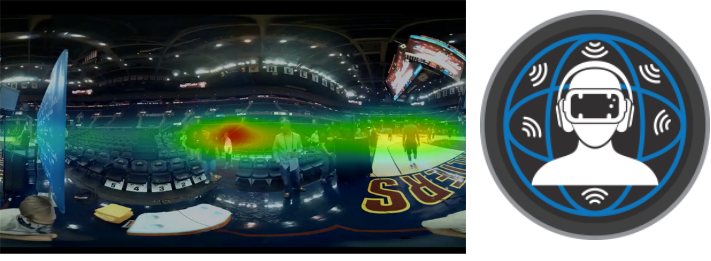

Driven by growing interest in immersive experiences, VR technology has advanced rapidly in both academia and industry, with major players including Facebook/Oculus, HTC, and Samsung. Learning from audio-visual information has attracted considerable interest as a way to create a true sense of ‘being there’ in VR. The objective of this project is to develop multimodal attention methods for VR video that automatically identify what captures the user’s attention. To this end, audio-visual cues will be investigated, and a new deep learning model will be developed to predict visual attention in omnidirectional content.

Requirements:

Basic understanding of deep learning

Strong Python programming skills, with knowledge of PyTorch or TensorFlow

The ideal candidate should have an interest in VR and must be able to learn new tools and acquire new knowledge.