Mobile Video Color Transfer Project

27th March 2020

Various multimedia software products can be used to create online visual media. Editing tasks of this kind were previously carried out by artists using software applications such as Adobe Photoshop and Premiere Pro. Recent improvements in the affordances of mobile devices and high-speed mobile data networks have made these editing capabilities readily available on mobile devices, opening them to a broader consumer base. However, the precise role of the user in creative practice is often neglected in favor of reporting faster, more streamlined device functionality. In this project, using a prototype mobile color transfer application, we seek to identify high-level human-computer interaction issues concerning video recoloring interfaces, driven by the needs of different user types, via a methodological and explorative process. On this webpage, we provide additional information for our recent papers:

- M. Grogan*, E. Zerman*, G. W. Young*, A. Smolic. “A pilot study on video color transfer: A survey of user-type opinions.”, in 14th International Conference on Interfaces and Human Computer Interaction, July 2020, Zagreb, Croatia.

- M. Grogan*, E. Zerman*, G. W. Young*, A. Smolic. “A Case Study on Video Color Transfer: Exploring User Motivations, Expectations, and Satisfaction.”, arXiv:2007.08948 [cs.HC], 2020.

Mobile Video Color Transfer Application

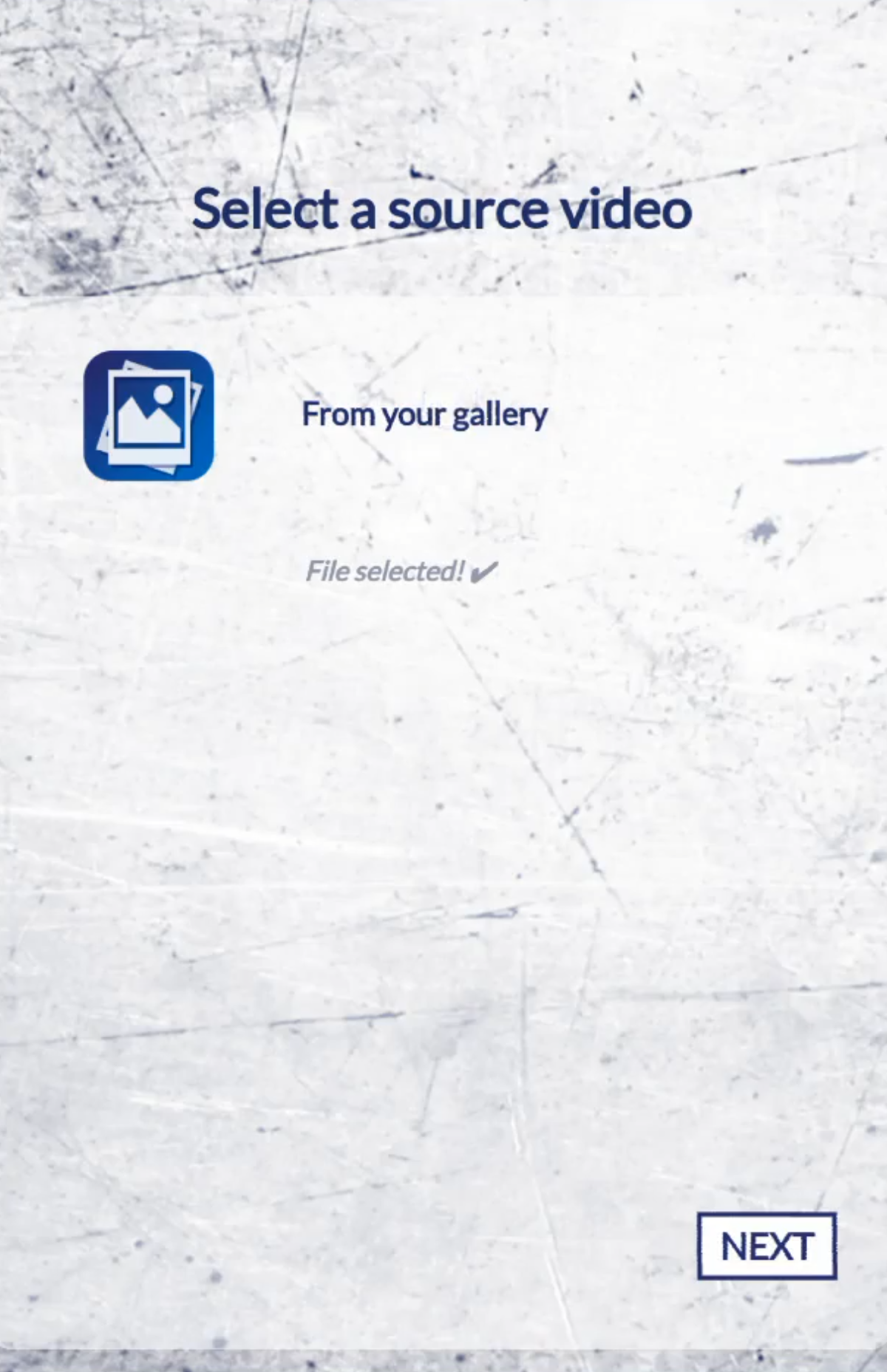

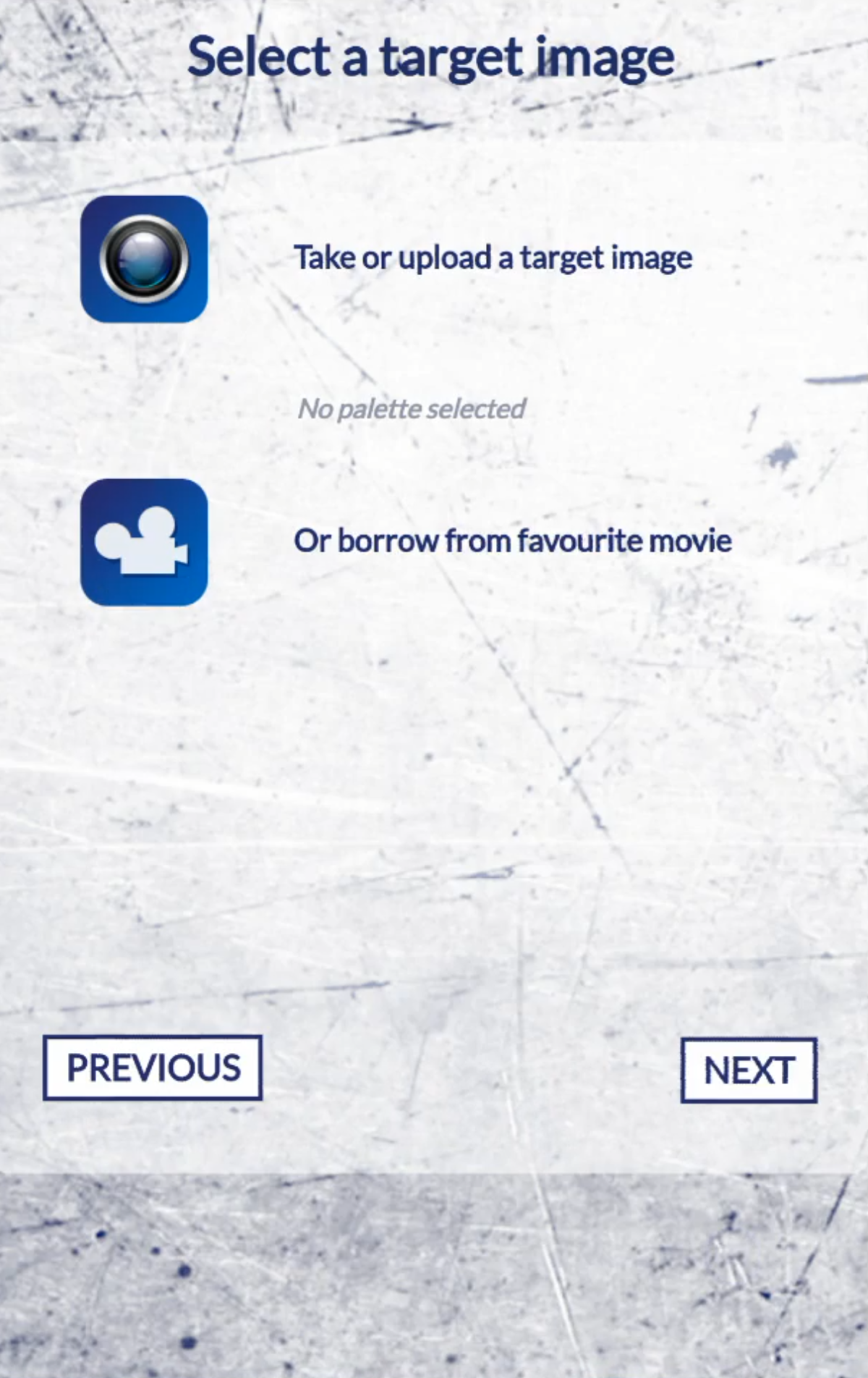

For this study, we developed a prototype mobile video color transfer application for Android devices (e.g., smartphones and tablets), as described in the paper. The application lets the user select a source video and a target color palette from pre-selected options (see below). For this pilot study, the prototype was developed only to enable participants to carry out the color transfer operation on mobile devices. The color transfer itself therefore does not run on the device: the selected video and palette are uploaded to a dedicated server, where the operation is completed, and the resulting video is downloaded to the device once processing is done. The user interface can be seen in the table below:

| Video selection | Target palette selection | Target palettes | Preview screen | Result screen |

Selection of the Source Videos

The source videos were selected from stock video footage websites, for example https://coverr.co/, https://pexels.com/, and https://pixabay.com/. For the replicability of this study, we selected videos with free-to-use or public domain licenses. Sixteen source videos (see below) were selected with consideration to various qualitative and production-related parameters, such as the type of focus, camera movement, and semantics. Specifically, consideration was given to three common mobile phone camera use scenarios: Personal (i.e., similar to personal recordings taken to remember a moment), Professional (i.e., similar to recordings captured for institutions or businesses), and Touristic. These parameters are reported in the table below, along with a link to each video’s source. Because the videos had different durations, only the first 10 seconds of each video were used, to avoid disparities caused by differences in processing duration. As a result, all source videos were 10 seconds long.
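The 10-second trimming described above could be done with a command-line tool such as ffmpeg. The sketch below is one possible approach and not necessarily the one used in this study: the use of ffmpeg, the helper names `build_trim_command` and `trim_video`, and the example file names are all assumptions made for illustration.

```python
import shlex
import subprocess

CLIP_SECONDS = 10  # clip length stated in the text


def build_trim_command(src, dst, seconds=CLIP_SECONDS):
    """Return an ffmpeg argument list that keeps the first `seconds` of `src`."""
    if seconds <= 0:
        raise ValueError("seconds must be positive")
    return [
        "ffmpeg",
        "-y",                # overwrite the output file if it already exists
        "-i", str(src),      # input video
        "-t", str(seconds),  # stop writing output after this many seconds
        str(dst),            # output video (re-encoded with ffmpeg defaults)
    ]


def trim_video(src, dst, seconds=CLIP_SECONDS):
    """Run ffmpeg to write the trimmed clip (assumes ffmpeg is on PATH)."""
    subprocess.run(build_trim_command(src, dst, seconds), check=True)


if __name__ == "__main__":
    # Hypothetical file names; prints the command instead of running it.
    print(shlex.join(build_trim_command("balloon.mp4", "balloon_10s.mp4")))
```

Re-encoding (rather than stream copying) is chosen here so that the cut does not depend on keyframe positions, at the cost of a slower trim.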

| Name | Snapshot | Video | Theme | Speed | Type of Focus | Camera Movement | Link |

| Balloon |  |

Touristic | Realtime | All-in-focus | Camera in hand | [Link] | |

| Beach |  |

Professional | Realtime | All-in-focus | Drone | [Link] | |

| Church |  |

Touristic | Realtime | All-in-focus | Tripod | [Link] | |

| City |  |

Professional | Time Lapse | All-in-focus | Still | [Link] | |

| Concert |  |

Personal | Slow Motion | All-in-focus | Camera in hand | [Link] | |

| Dance |  |

Touristic | Realtime | All-in-focus | Tripod | [Link] | |

| Flower |  |

Personal | Realtime | Shallow DoF | Camera in hand | [Link] | |

| Kick |  |

Personal | Realtime | All-in-focus | Camera in hand | [Link] | |

| Meeting |  |

Professional | Realtime | Focused | Camera in hand | [Link] | |

| Road |  |

Personal | Realtime | All-in-focus | Camera in hand | [Link] | |

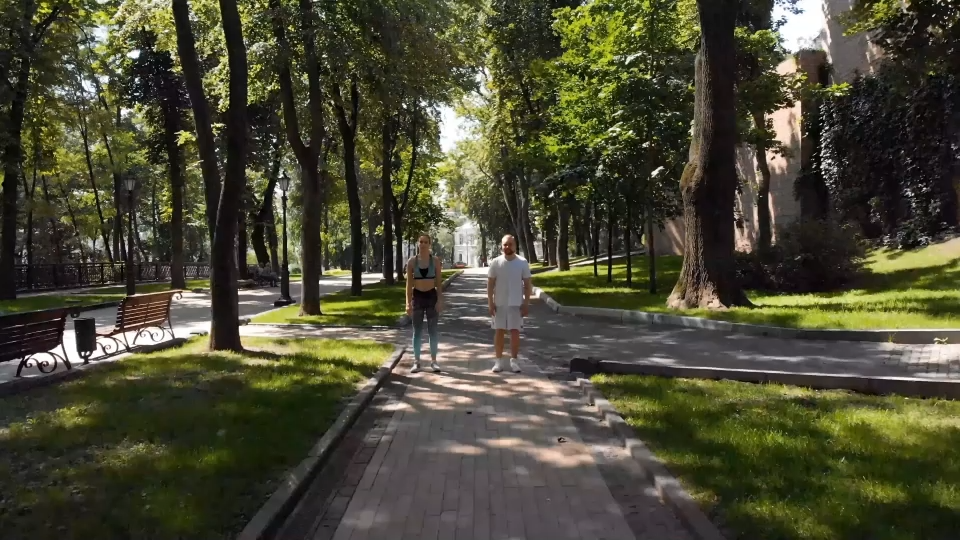

| Run |  |

Professional | Realtime | All-in-focus | Drone | [Link] | |

| Ski |  |

Personal | Slow motion | All-in-focus | Tripod | [Link] | |

| Sky |  |

Personal | Realtime | All-in-focus | Still | [Link] | |

| Street |  |

Touristic | Realtime | All-in-focus | Still | [Link] | |

| Temple |  |

Touristic | Realtime | Out of focus | Camera in hand | [Link] | |

| Town |  |

Professional | Realtime | All-in-focus | Drone | [Link] |

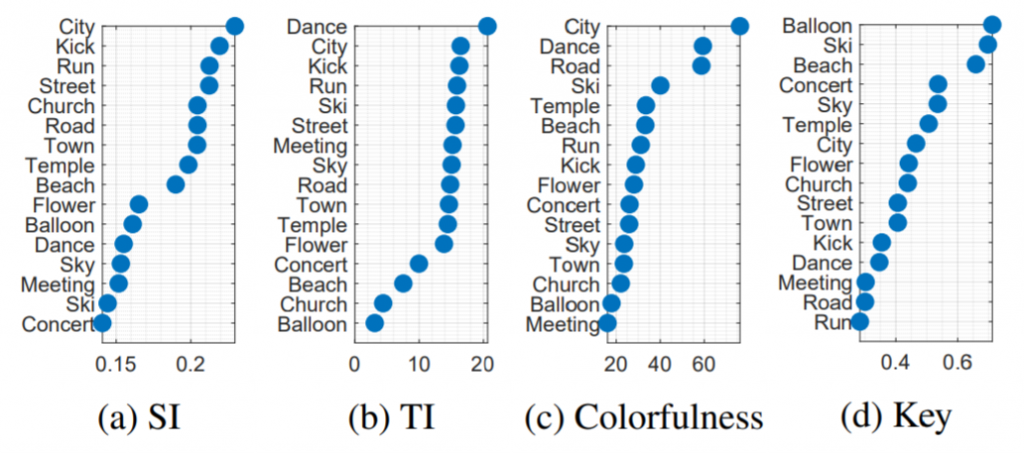

Aside from the parameters reported in the table, we also required the selected videos to vary in several quantitative parameters (see the figure below) so that they cover the considered feature space: Spatial Perceptual Information (SI) and Temporal Perceptual Information (TI) (ITU-T Rec. P.910), colorfulness (Hasler and Susstrunk, 2013), and key (Valenzise et al., 2014). The results in the figure below show that the selected source videos have different features that cover a wide range of values.
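To make three of these measures concrete, the sketch below computes SI and TI in the spirit of ITU-T Rec. P.910 and the Hasler and Susstrunk colorfulness metric. It is an illustrative, standard-library-only sketch under stated assumptions, not the code used for the figure: frames are assumed to be 2-D lists of luminance values (at least 3x3), pixels are assumed to be (R, G, B) tuples, the population standard deviation is used, and all function names are hypothetical. The key measure is not covered here.

```python
import math
from statistics import mean, pstdev


def sobel_magnitude(frame):
    """Sobel gradient magnitude at every interior pixel of a luminance frame."""
    h, w = len(frame), len(frame[0])
    out = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (frame[y - 1][x + 1] + 2 * frame[y][x + 1] + frame[y + 1][x + 1]
                  - frame[y - 1][x - 1] - 2 * frame[y][x - 1] - frame[y + 1][x - 1])
            gy = (frame[y + 1][x - 1] + 2 * frame[y + 1][x] + frame[y + 1][x + 1]
                  - frame[y - 1][x - 1] - 2 * frame[y - 1][x] - frame[y - 1][x + 1])
            out.append(math.hypot(gx, gy))
    return out


def spatial_information(frames):
    """SI: maximum over frames of the std. dev. of the Sobel-filtered frame."""
    return max(pstdev(sobel_magnitude(f)) for f in frames)


def temporal_information(frames):
    """TI: maximum over time of the std. dev. of successive frame differences.

    Needs at least two frames of equal size.
    """
    stds = []
    for prev, cur in zip(frames, frames[1:]):
        diff = [c - p
                for row_p, row_c in zip(prev, cur)
                for p, c in zip(row_p, row_c)]
        stds.append(pstdev(diff))
    return max(stds)


def colorfulness(pixels):
    """Hasler-Susstrunk colorfulness for a list of (R, G, B) pixels."""
    rg = [r - g for r, g, b in pixels]              # red-green opponent channel
    yb = [0.5 * (r + g) - b for r, g, b in pixels]  # yellow-blue opponent channel
    sigma = math.hypot(pstdev(rg), pstdev(yb))
    mu = math.hypot(mean(rg), mean(yb))
    return sigma + 0.3 * mu
```

In practice an array library would be used for speed on full-resolution frames; plain lists are used here only to keep the sketch self-contained.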

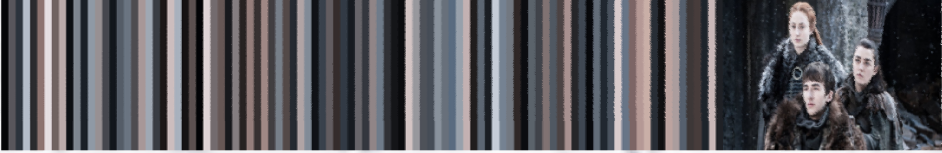

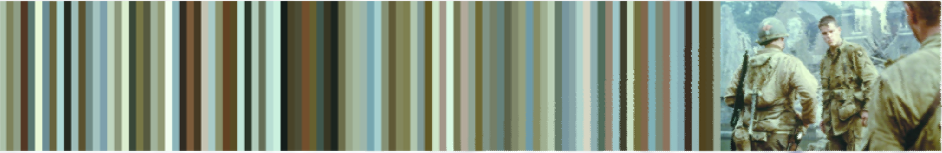

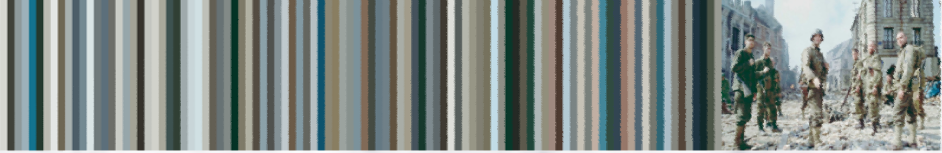

Selection of the Target Palettes

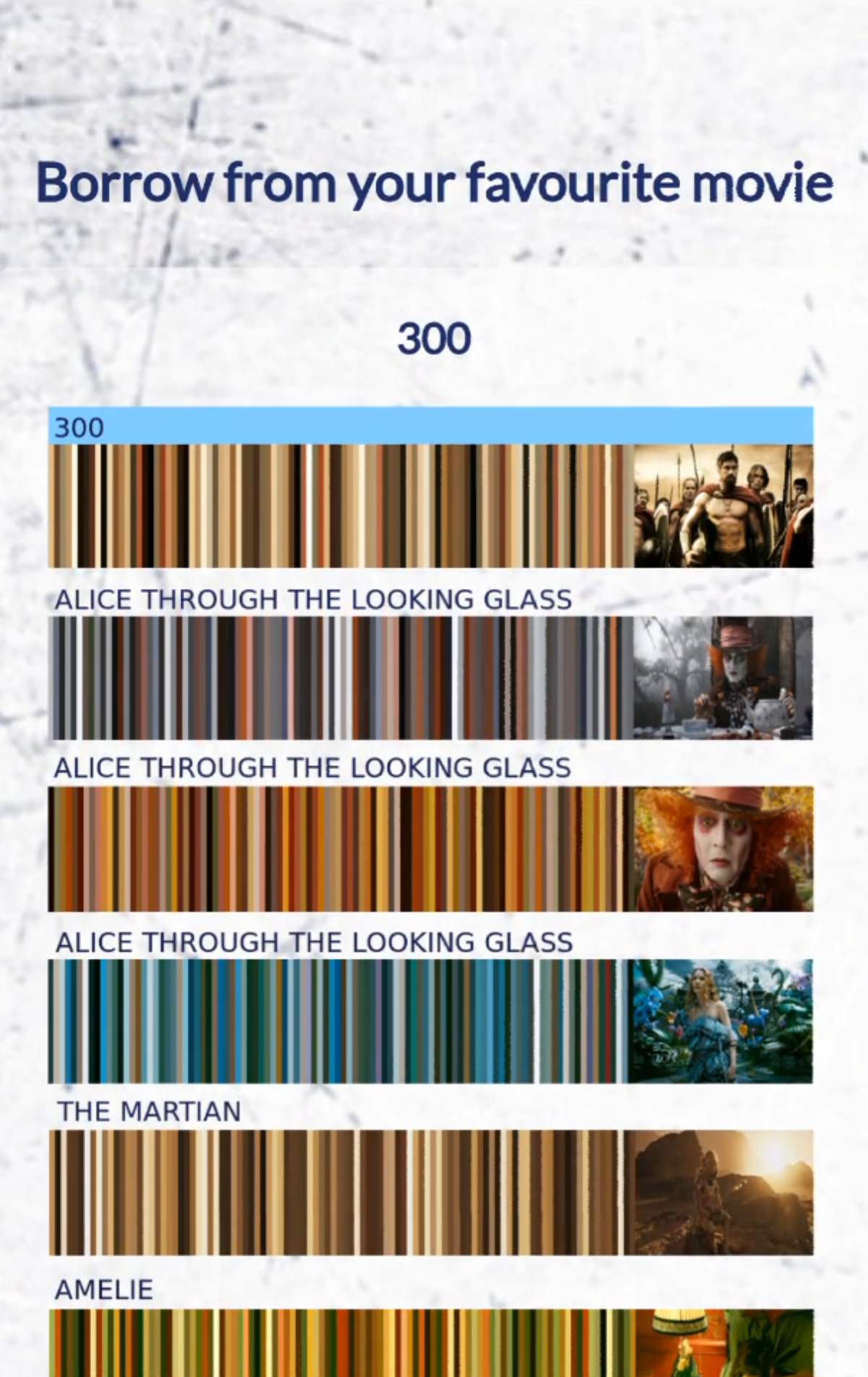

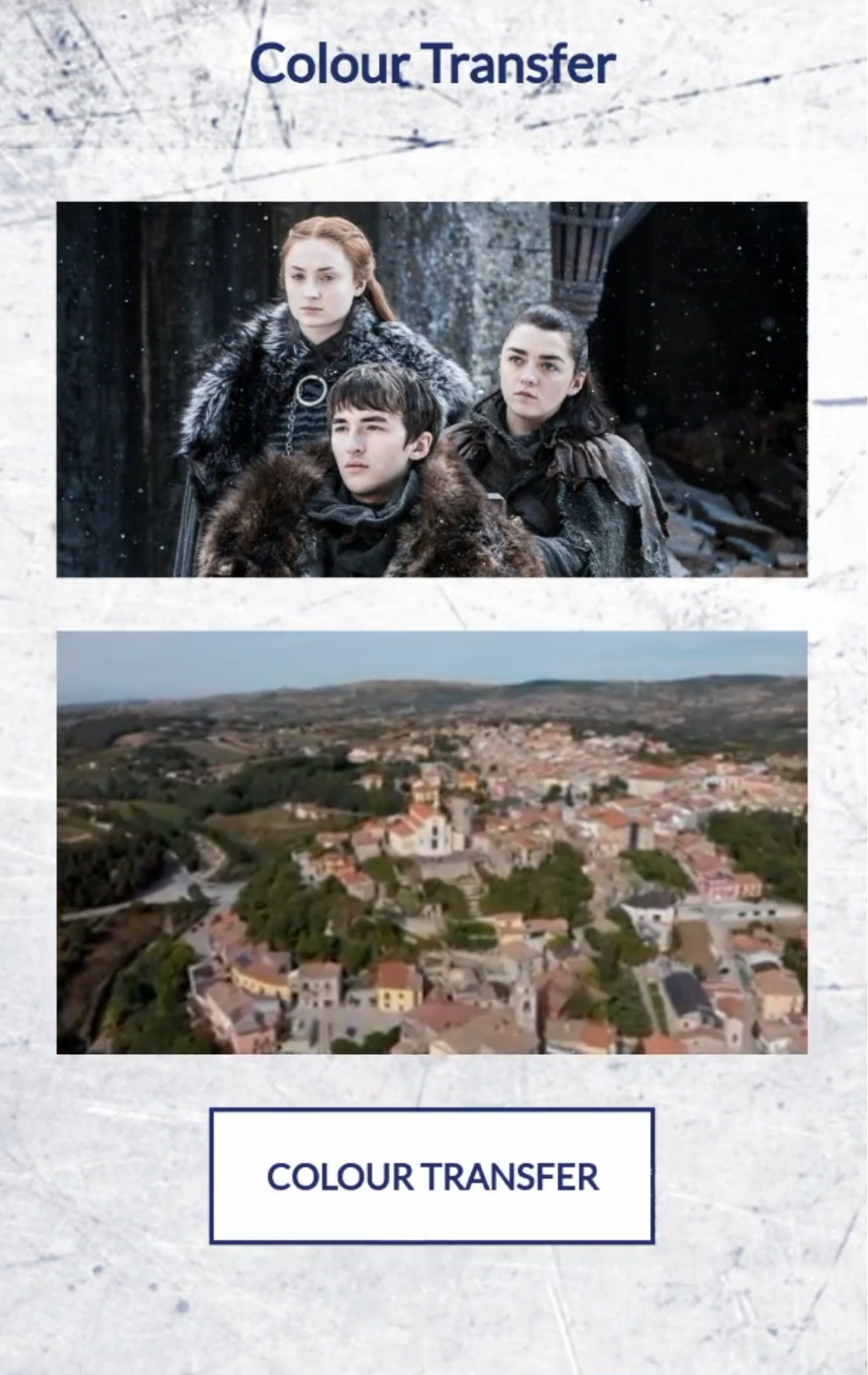

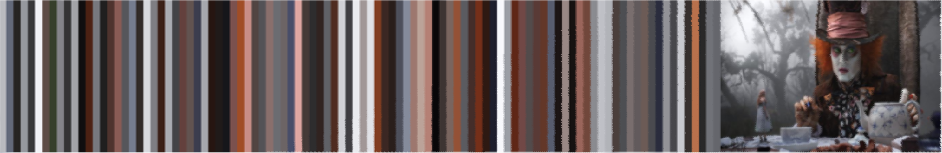

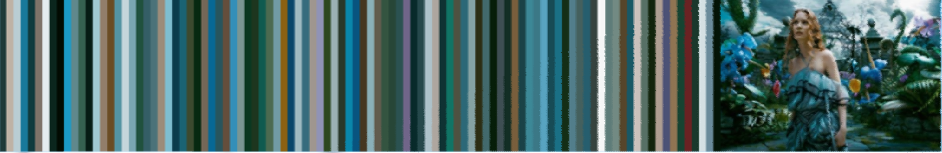

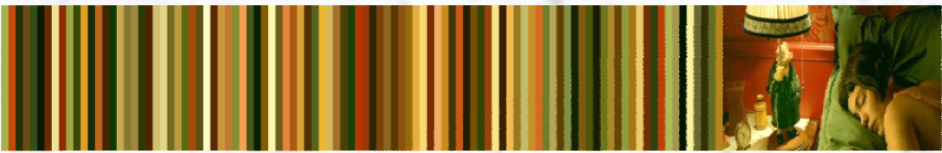

The target palettes were selected with consideration to the color distributions of their color palette representations (see the table below). These color palettes were selected because they cover a variety of color themes and color combinations. Additionally, they were taken from movies that used specialized color grading pipelines to convey a specific feeling or mood to the viewer.

| 300 | Alice 1 | Alice 2 |
| Alice 3 | Amelie | Game of Thrones |
| Kung Fu Panda | Martian | Saving Pr. Ryan 1 |
| Saving Pr. Ryan 2 | The Revenant | Theory of Everything |

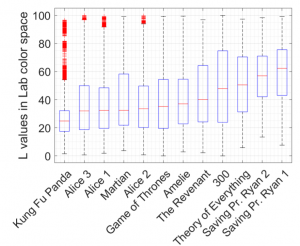

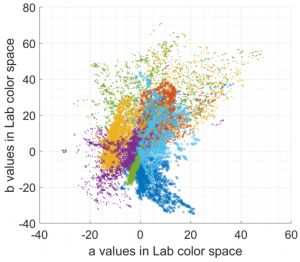

To analyze the color distribution, we looked at two distinct parameters:

- Variation of the brightness of the pixels in the target image

- Variation in the chroma values of the striped color palettes attached.

For this purpose, the colors were converted from red-green-blue (RGB) to the CIE Lab color space (Robertson, 1976). In the CIE Lab color space, L describes the lightness of a pixel, whereas a and b describe its chroma. To examine the variation in brightness of the target images, the L values found in each target image (i.e., the screenshot frame in the target color palette) are drawn as a boxplot; see the figure below. As these boxplots show, the target images had varying levels of brightness.
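The conversion and the brightness summary can be sketched as follows. This is a minimal illustration, not the analysis code behind the figures: it assumes 8-bit sRGB input and a D65 reference white (neither is stated on this page), and the function names `srgb_to_lab` and `lightness_summary` are hypothetical.

```python
from statistics import quantiles

# Assumptions: sRGB primaries and a D65 reference white.
D65_WHITE = (0.95047, 1.0, 1.08883)
DELTA = 6 / 29


def _linearize(c8):
    """Undo the sRGB transfer curve for one 8-bit channel value."""
    c = c8 / 255.0
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def _f(t):
    """Nonlinearity used by CIE Lab (cube root with a linear toe)."""
    return t ** (1 / 3) if t > DELTA ** 3 else t / (3 * DELTA ** 2) + 4 / 29


def srgb_to_lab(r8, g8, b8):
    """Convert one 8-bit sRGB pixel to (L, a, b)."""
    r, g, b = (_linearize(c) for c in (r8, g8, b8))
    # Linear sRGB to CIE XYZ (D65).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    fx, fy, fz = (_f(v / n) for v, n in zip((x, y, z), D65_WHITE))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def lightness_summary(pixels):
    """Five-number summary of L for a list of (R, G, B) pixels.

    These are the values a boxplot is drawn from; needs at least two pixels.
    """
    ls = sorted(srgb_to_lab(*p)[0] for p in pixels)
    q1, med, q3 = quantiles(ls, n=4)
    return {"min": ls[0], "q1": q1, "median": med, "q3": q3, "max": ls[-1]}
```

The a and b components returned by `srgb_to_lab` are the values that would be scattered to inspect the chroma distribution of each palette.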

To analyze the variation in the chrominance values of the striped color palettes, we plotted the distribution of a and b values in the CIE Lab color space (see the figure below), where each color and marker combination corresponds to a different color palette. As this plot shows, each color palette has a different color combination, which gives users a choice of distinct color themes. In the application, users can also add other target images from their image gallery. However, to keep the parameters controlled in this study, we only allowed users to select from these 12 predefined target color palettes.

Additional Results

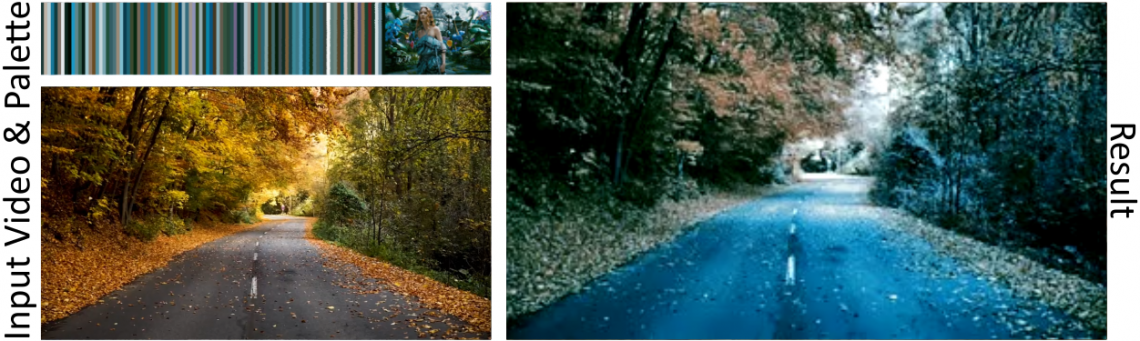

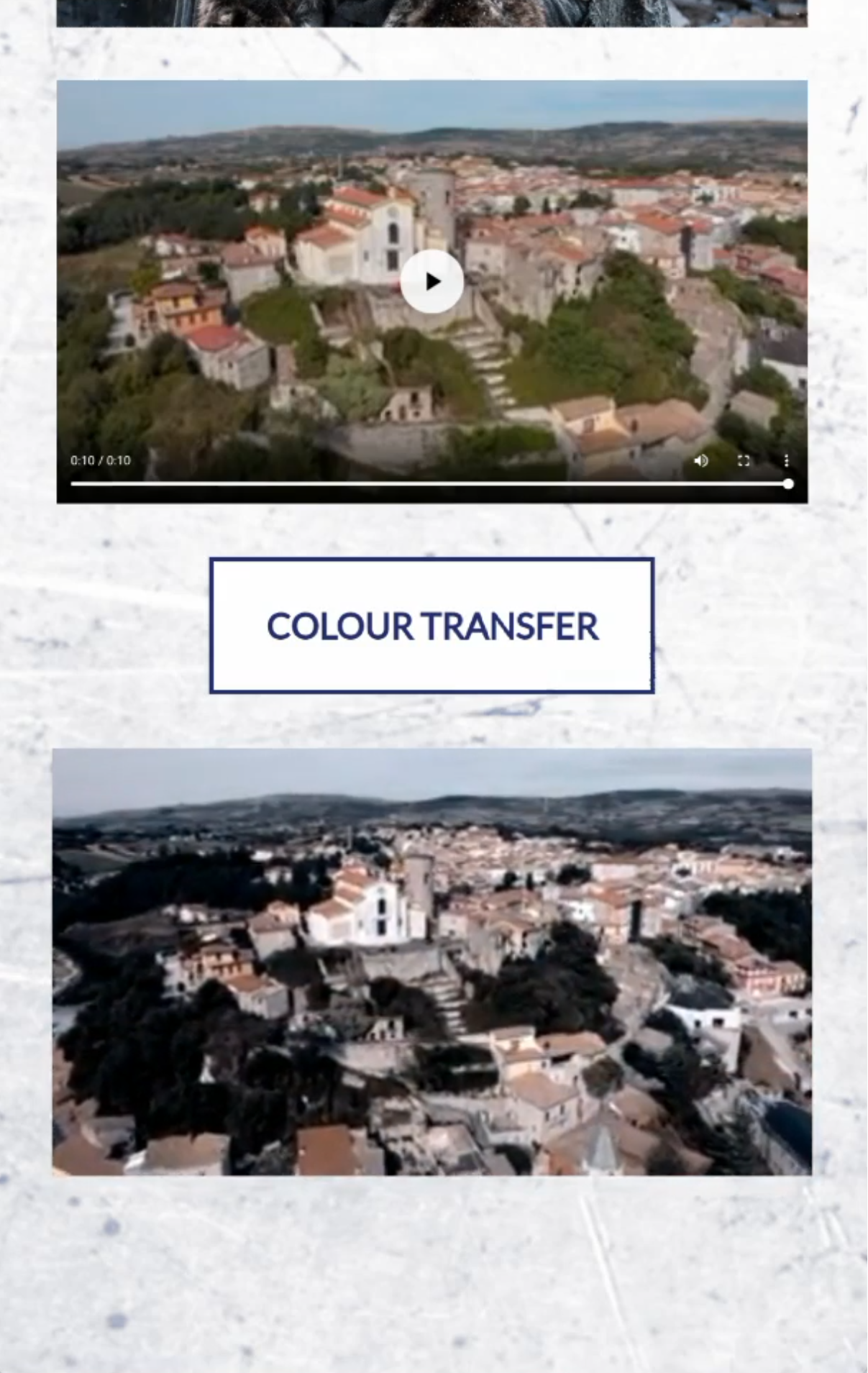

In this section, we provide additional color transfer results to give a better idea of what mobile video color transfer produces. These visual results show the outcome of different combinations of source videos and target palettes; see the table below.

| Source Video Name | Source Video | Target Palette Name | Target Palette | Resulting Video |
|---|---|---|---|---|
| Balloon | | Amelie | | |
| Concert | | Kung Fu Panda | | |
| Dance | | Kung Fu Panda | | |
| Kick | | Amelie | | |
| Road | | Alice 3 | | |
| Road | | Martian | | |
| Run | | The Revenant | | |

Acknowledgement

This publication has emanated from research conducted with the financial support of Science Foundation Ireland (SFI) under the Grant Number 15/RP/2776.

Contact

If you have any questions, send an e-mail to mgrogan@tcd.ie or zermane@scss.tcd.ie or youngga@tcd.ie.