Using light fields to enable deep monocular depth estimation

12th October 2020

Proposed by: Martin Alain – alainm at scss.tcd.ie

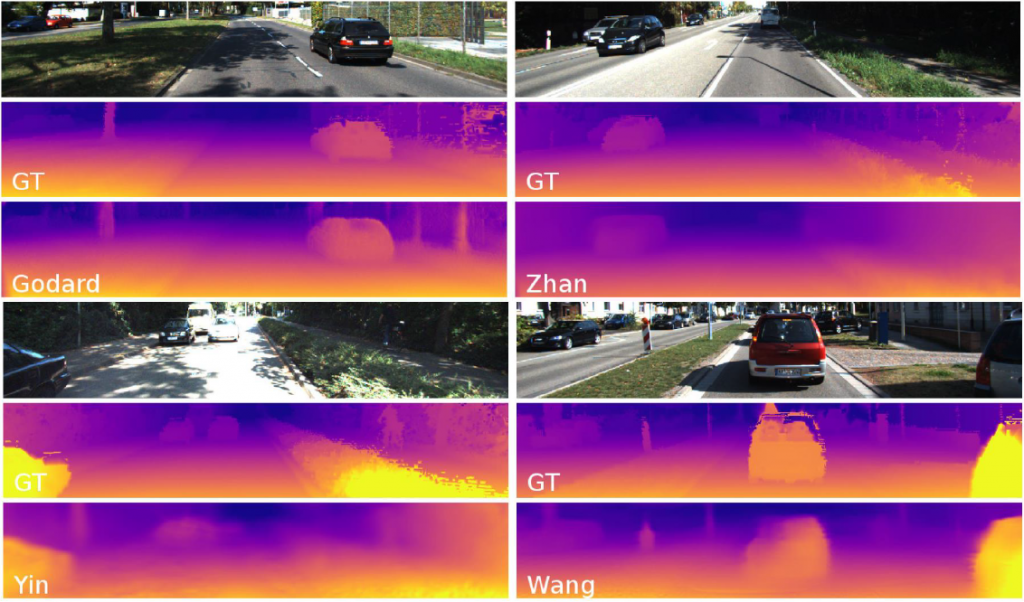

Estimating the depth of a scene from image data is one of the core tasks in computer vision. While traditional methods usually rely on stereoscopic or multi-view images, recent advances in deep learning have made it possible to obtain high-quality depth maps from a single image [1-4], a task often referred to as monocular depth estimation. Recent work has also demonstrated that the defocus blur caused by the limited depth of field of some cameras can be used as a cue to improve depth estimation from a single image [5-7].

The goal of this project is to study monocular depth estimation using light field data. Compared to traditional 2D imaging systems, which capture only the spatial intensity of the light rays, 4D light fields also capture the angular direction of the light rays, and usually consist of a collection of 2D images arranged on a 2D grid. One of the flagship applications of light fields is the ability for the user to generate refocused images while controlling both the placement of the focus plane and the amount of defocus blur [8]. Thus, we propose in this project to study the performance of existing monocular depth estimation methods as a function of, notably, the depth of the refocus plane and the intensity of the defocus blur. In addition, the (re-)training of deep networks for monocular depth estimation using light fields will be explored. Conveniently, synthetic light field datasets with ground-truth depth are available [9,10], which makes objective evaluation possible. Note that while depth estimation from a full light field is also an ongoing research topic, this project focuses only on depth estimation from a single image.
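To make the refocusing step concrete, here is a minimal sketch of basic shift-and-sum refocusing in Python with NumPy. It is not the generalized tilt-shift method of [8]. It assumes the light field is stored as an array of shape `(U, V, H, W)` (grayscale views on a `U × V` grid), uses integer-pixel shifts via `np.roll` (which wraps around at the image borders), and assumes a sign convention in which a scene point with disparity `d` moves by `-d` pixels per view step. The function name `refocus` and the parameters `slope` and `aperture_radius` are hypothetical names chosen for illustration.

```python
import numpy as np


def refocus(light_field, slope, aperture_radius=None):
    """Shift-and-sum refocus of a 4D light field of shape (U, V, H, W).

    slope: disparity (pixels per view step) of the plane to bring into focus.
    aperture_radius: views farther than this distance (in view steps) from
        the grid centre are skipped; a smaller radius gives less defocus
        blur. None uses every view.
    """
    U, V, H, W = light_field.shape
    uc, vc = (U - 1) / 2.0, (V - 1) / 2.0  # centre of the view grid
    acc = np.zeros((H, W), dtype=np.float64)
    count = 0
    for u in range(U):
        for v in range(V):
            du, dv = u - uc, v - vc
            if aperture_radius is not None and np.hypot(du, dv) > aperture_radius:
                continue  # view lies outside the synthetic aperture
            # Integer shift that aligns points of disparity `slope`
            # (assumed sign convention; sub-pixel shifts are not handled).
            dy = int(round(slope * du))
            dx = int(round(slope * dv))
            acc += np.roll(light_field[u, v], shift=(dy, dx), axis=(0, 1))
            count += 1
    return acc / count


# Toy example: a single bright point with disparity 2 on a 5x5 view grid.
U = V = 5
H = W = 32
d = 2
lf = np.zeros((U, V, H, W))
for u in range(U):
    for v in range(V):
        lf[u, v, 16 - d * (u - 2), 16 - d * (v - 2)] = 1.0

in_focus = refocus(lf, slope=d)       # point is sharp
out_of_focus = refocus(lf, slope=0)   # point is spread over 25 pixels
central_only = refocus(lf, slope=0, aperture_radius=0)  # no defocus blur
```

Sweeping `slope` moves the focus plane through the scene, while `aperture_radius` controls how strong the defocus blur is. These are the two factors whose effect on monocular depth estimation the project sets out to study.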

[1] Saxena, Ashutosh, Sung H. Chung, and Andrew Y. Ng. "Learning depth from single monocular images." Advances in neural information processing systems. 2006.

[2] Chen, Weifeng, et al. "Single-image depth perception in the wild." Advances in neural information processing systems. 2016.

[3] Khan, Faisal, Saqib Salahuddin, and Hossein Javidnia. "Deep Learning-Based Monocular Depth Estimation Methods—A State-of-the-Art Review." Sensors 20.8 (2020): 2272.

[4] Zhao, Chaoqiang, et al. "Monocular depth estimation based on deep learning: An overview." Science China Technological Sciences (2020): 1-16.

[5] Anwar, Saeed, Zeeshan Hayder, and Fatih Porikli. "Depth Estimation and Blur Removal from a Single Out-of-focus Image." BMVC. Vol. 1. 2017.

[6] Carvalho, Marcela, et al. "Deep Depth from Defocus: how can defocus blur improve 3D estimation using dense neural networks?" Proceedings of the European Conference on Computer Vision (ECCV). 2018.

[7] Lee, Junyong, et al. "Deep defocus map estimation using domain adaptation." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019.

[8] Alain, Martin, Weston Aenchbacher, and Aljosa Smolic. "Interactive light field tilt-shift refocus with generalized shift-and-sum." arXiv preprint arXiv:1910.04699 (2019).

[9] Honauer, Katrin, et al. "A dataset and evaluation methodology for depth estimation on 4D light fields." Asian Conference on Computer Vision. Springer, Cham, 2016.

[10] Shi, Jinglei, Xiaoran Jiang, and Christine Guillemot. "A framework for learning depth from a flexible subset of dense and sparse light field views." IEEE Transactions on Image Processing 28.12 (2019): 5867-5880.

Related links:

https://v-sense.scss.tcd.ie:443/research/tilt-shift/

https://lightfield-analysis.uni-konstanz.de/

http://clim.inria.fr/Datasets/InriaSynLF/index.html