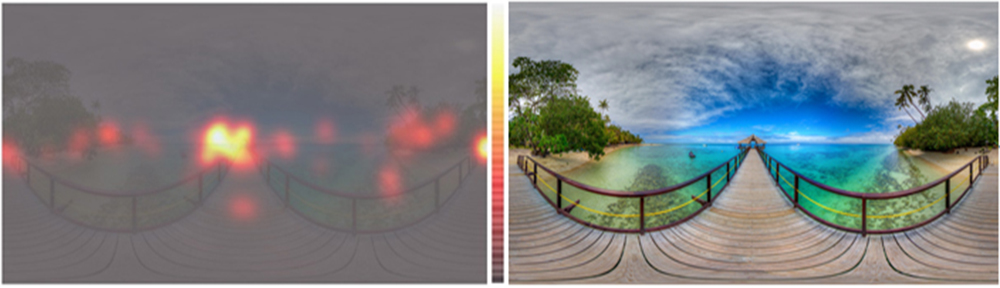

Demo: Visual Attention for Omnidirectional Images in VR Applications

17th May 2017

Overview:

Understanding visual attention has long been a topic of interest across research communities. It is particularly important for omnidirectional images (ODIs) viewed with a head-mounted display (HMD), where only a fraction of the captured scene, known as the viewport, is displayed at any given time.

Here, we share a demo that displays a set of ODIs (either provided by the user or chosen from those already available) and records the position of the viewport’s center at every animation frame for each ODI. The collected data is downloaded automatically at the end of the session.

https://www.scss.tcd.ie/~ozcinarc/Testbed/
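
To make the logging idea concrete, here is a minimal TypeScript sketch of per-frame viewport-center recording. It is not the demo's actual code: the names `ViewportLogger`, `logFrames`, and `directionToLonLat`, the CSV layout, and the coordinate convention (y up, -z straight ahead at longitude 0) are all assumptions made for illustration.

```typescript
// Illustrative sketch only; every name and convention here is an assumption.

type Direction = { x: number; y: number; z: number };

// One logged sample: where the viewport center points at a given frame.
interface ViewportSample {
  imageId: string; // hypothetical identifier of the ODI on display
  timeMs: number;  // timestamp passed to the animation-frame callback
  lonDeg: number;  // longitude of the viewport center, in degrees
  latDeg: number;  // latitude of the viewport center, in degrees
}

// Convert a viewing direction to longitude/latitude in degrees.
// Assumed convention: y is up, -z is straight ahead (lon = 0), +x is to the right.
function directionToLonLat(d: Direction): { lonDeg: number; latDeg: number } {
  const len = Math.sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
  if (len === 0) throw new Error("direction vector must be non-zero");
  const sinLat = Math.max(-1, Math.min(1, d.y / len)); // clamp against rounding
  return {
    lonDeg: (Math.atan2(d.x, -d.z) * 180) / Math.PI,
    latDeg: (Math.asin(sinLat) * 180) / Math.PI,
  };
}

class ViewportLogger {
  private samples: ViewportSample[] = [];

  // Store one sample for the given image and frame time.
  record(imageId: string, timeMs: number, d: Direction): void {
    const { lonDeg, latDeg } = directionToLonLat(d);
    this.samples.push({ imageId, timeMs, lonDeg, latDeg });
  }

  size(): number {
    return this.samples.length;
  }

  // Serialize all samples as CSV (assumes image ids contain no commas).
  toCsv(): string {
    const rows = this.samples.map(
      (s) => `${s.imageId},${s.timeMs.toFixed(1)},${s.lonDeg.toFixed(3)},${s.latDeg.toFixed(3)}`,
    );
    return ["image,time_ms,lon_deg,lat_deg", ...rows].join("\n");
  }
}

// Anything that calls the callback on the next frame with a timestamp.
type FrameScheduler = (callback: (timeMs: number) => void) => void;

// Record the viewport center on every frame until shouldStop() returns true.
function logFrames(
  logger: ViewportLogger,
  imageId: string,
  getDirection: () => Direction,
  schedule: FrameScheduler,
  shouldStop: () => boolean,
): void {
  const onFrame = (timeMs: number): void => {
    if (shouldStop()) return;
    logger.record(imageId, timeMs, getDirection());
    schedule(onFrame);
  };
  schedule(onFrame);
}
```

In a browser, `schedule` could be a thin wrapper around `requestAnimationFrame`, and `getDirection` could read the camera's forward vector from whatever rendering library is in use. At the end of a session, the string returned by `toCsv()` could be offered to the user as a file download. Injecting the scheduler keeps the logging logic testable outside a browser.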