Aesthetic Image Captioning from Weakly-Labelled Photographs

26th August 2019

Abstract

Aesthetic image captioning (AIC) refers to the multi-modal task of generating critical textual feedback for photographs. Deep models for natural image captioning (NIC) are trained end-to-end on large curated datasets such as MS-COCO, but no comparably large, clean dataset exists for AIC. To address this, we propose an automatic cleaning strategy that builds a benchmark AIC dataset from the images and noisy comments readily available on photography websites. We propose a probabilistic caption-filtering method for cleaning the noisy web data and use it to compile a large-scale, clean dataset, 'AVA-Captions' (~230,000 images with ~5 captions per image). Additionally, by exploiting the latent associations between aesthetic attributes, we propose a strategy for training a convolutional neural network (CNN) based visual feature extractor, which is typically the first component of an AIC framework. The strategy is weakly supervised and can be used effectively to learn rich aesthetic representations without requiring expensive ground-truth annotations. Finally, we present a thorough analysis of the proposed contributions using automatic metrics and subjective evaluations.


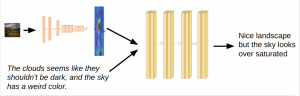

(a) Weakly-supervised training of the CNN. Images and comments are provided as input. The image is fed to the CNN and the comment is fed to the inferred topic model. The topic model predicts a distribution over the topics, which is used as the label for computing the CNN's loss.

(b) Training the LSTM. The visual features extracted by the CNN and the comment are fed as input to the LSTM, which predicts a candidate caption.
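The weak supervision in panel (a) can be illustrated as a soft-label loss. The following is a minimal Python sketch under stated assumptions, not the authors' implementation. It assumes that the CNN ends in K logits, one per topic, and that the loss is the cross-entropy between the topic distribution inferred from the comment and the CNN's softmax output. The caption above does not name the exact loss. The function names `softmax` and `soft_label_cross_entropy` are hypothetical.

```python
import math
from typing import Sequence


def softmax(logits: Sequence[float]) -> list[float]:
    """Numerically stable softmax over the CNN's K output logits."""
    m = max(logits)  # subtract the max so exp() cannot overflow
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def soft_label_cross_entropy(logits: Sequence[float],
                             topic_dist: Sequence[float]) -> float:
    """Cross-entropy H(q, p) = -sum_k q_k * log p_k.

    Assumption (not stated in the source): q is the topic distribution the
    topic model infers from the comment (the weak label), and p is the CNN's
    softmax prediction over the same K topics.
    """
    if len(logits) != len(topic_dist):
        raise ValueError("logits and topic distribution must both have K entries")
    probs = softmax(logits)
    eps = 1e-12  # guard against log(0) for near-zero probabilities
    return -sum(q * math.log(p + eps) for q, p in zip(topic_dist, probs))


# Hypothetical example with K = 3 topics. In practice the topic distribution
# would come from a topic model (e.g. LDA) applied to the comment.
topic_dist = [0.7, 0.2, 0.1]
cnn_logits = [2.0, 0.5, -1.0]
print(soft_label_cross_entropy(cnn_logits, topic_dist))
```

The target is a full distribution rather than a one-hot class. For that reason, a comment that mixes several topics spreads the training signal across all of them. The loss is smallest when the CNN's softmax equals the topic distribution. At that point it reduces to the entropy of that distribution.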


Citation

@inproceedings{Ghosal_iccv2019,
  title = {Aesthetic Image Captioning from Weakly-Labelled Photographs},
  author = {Koustav Ghosal and Aakanksha Rana and Aljosa Smolic},
  year = {2019},
  date = {2019-10-27},
  booktitle = {ICCV 2019 Workshop on Cross-Modal Learning in Real World},
  pubstate = {forthcoming},
  tppubtype = {inproceedings}
}

ACKNOWLEDGEMENT

This publication has emanated from research conducted with the financial support of Science Foundation Ireland (SFI) under the Grant Number 15/RP/2776.

CONTACT

If you have any questions, please send an e-mail to ghosalk@scss.tcd.ie