Neural style transfer for videos

18th October 2018

Proposed by Pierre Matysiak and Mairéad Grogan

Email: matysiap at scss.tcd.ie or mgrogan at tcd.ie

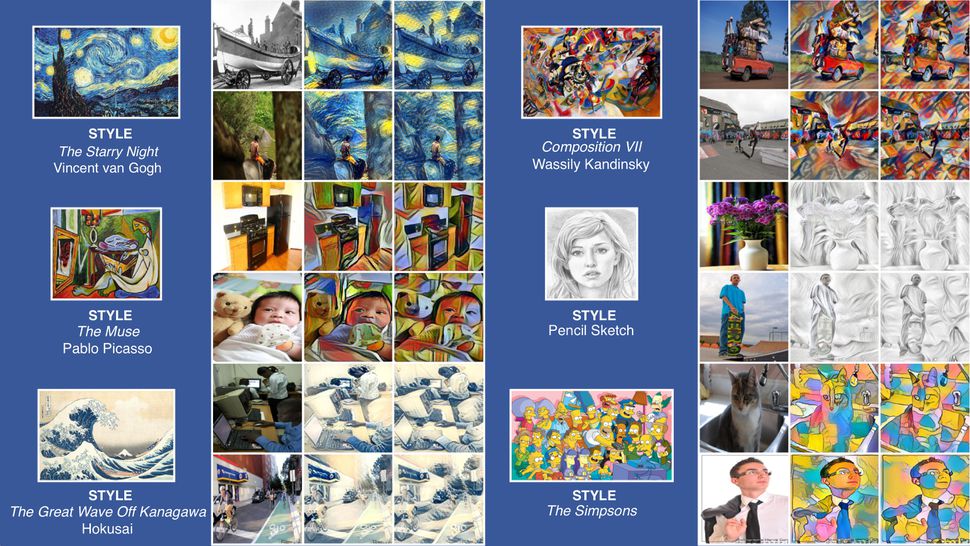

Style transfer is an image manipulation technique that applies the style of one image, typically a painting or other artwork, to another image of your choice. The technique is well established for single images, and several methods based on convolutional neural networks (CNNs) produce impressive results. The concept has also inspired feature-length films such as Loving Vincent, in which each frame resembles the painting style of Vincent van Gogh. For that film, however, every frame was painted by hand, which ensured temporal consistency and made the flow of the video pleasant to watch.

In this project, we want to achieve such results automatically. The aim is to give the student experience working with neural networks, whose popularity makes them a very important tool to understand. The focus of the work will be to test and choose a suitable network architecture for this task. In addition, some research will be needed into methods that ensure temporal and spatial consistency between frames, so that the output videos do not suffer from excessive flickering. The student will be expected to perform a literature review of the field, and is welcome to propose any additional functionality beyond the core application.
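To make the flickering problem concrete, the following is a minimal sketch of one way the temporal consistency of a stylised video could be measured. It is an illustration under simplifying assumptions, not a prescribed method for the project: frames are plain grayscale grids of floats, and consecutive frames are compared directly, without the optical-flow warping that Ruder et al. apply before comparing frames. The names `temporal_error` and `flicker_score` and the optional `mask` argument are hypothetical choices for this example.

```python
from typing import List, Optional

# Assumption: a frame is a grayscale image stored as rows of pixel intensities.
Frame = List[List[float]]


def temporal_error(prev: Frame, curr: Frame, mask: Optional[Frame] = None) -> float:
    """Weighted mean squared difference between two frames of the same shape.

    `mask` holds optional per-pixel weights (for example 0 where a pixel
    should be ignored); when omitted, every pixel has weight 1.
    """
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same shape")
    total = 0.0
    weight_sum = 0.0
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (p, c) in enumerate(zip(row_prev, row_curr)):
            w = 1.0 if mask is None else mask[y][x]
            total += w * (c - p) ** 2
            weight_sum += w
    return total / weight_sum if weight_sum > 0 else 0.0


def flicker_score(frames: List[Frame]) -> float:
    """Average temporal error over all pairs of consecutive frames."""
    if len(frames) < 2:
        return 0.0
    errors = [temporal_error(a, b) for a, b in zip(frames, frames[1:])]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    steady = [[[0.5, 0.5], [0.5, 0.5]] for _ in range(3)]
    flashing = [[[0.0, 0.0]], [[1.0, 1.0]], [[0.0, 0.0]]]
    print("steady:", flicker_score(steady))
    print("flashing:", flicker_score(flashing))
```

A score near zero means consecutive frames barely change. Because real videos contain motion, a direct frame difference also penalises legitimate movement, which is why the video style transfer literature compares each frame against a motion-compensated version of the previous one.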

Keywords: style transfer, deep learning, CNNs, GANs.

References

Gatys, L.A., Ecker, A.S., Bethge, M.: A neural algorithm of artistic style. arXiv preprint arXiv:1508.06576, 2015.

Ruder, M., Dosovitskiy, A., Brox, T.: Artistic Style Transfer for Videos and Spherical Images. Int J Comput Vis 126: 1199, 2018.

Gao, C., Gu, D., Zhang, F., Yu, Y.: ReCoNet: Real-time Coherent Video Style Transfer Network. arXiv preprint arXiv:1807.01197, 2018.